Data documentation helps teams build a shared understanding of their data and software architecture. Originally developed at LinkedIn, DataHub is a leading open-source metadata management platform. It enables data discovery, data observability, and federated governance for software engineers and analytics teams.

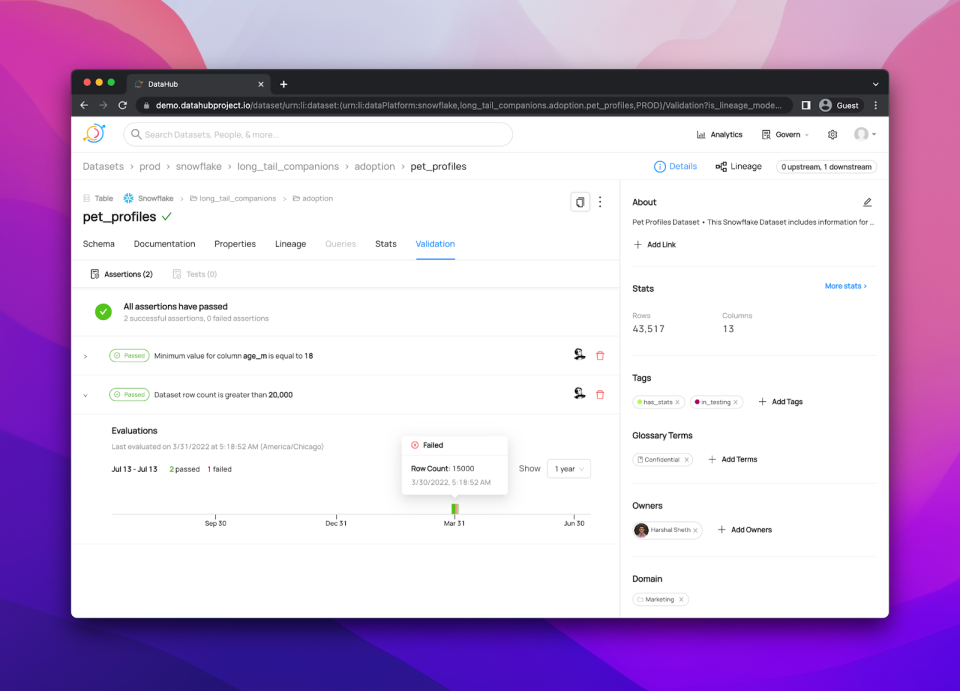

In the spirit of open source, Great Expectations and DataHub integrate to give end users a 360-degree view of their data assets: DataHub surfaces the outcomes of Great Expectations Validations alongside a dataset’s schema, documentation, lineage, and more.

Programmatic Metadata

Technical documentation shares knowledge between the creators and users of a tool. The same is true for sharing knowledge about a dataset: creators of data assets share context about how the data was generated, what it represents, how it should be used, and more. It’s critical that this metadata (i.e., data about data) is presented to business stakeholders in a way that helps them understand the relevance and reliability of a data asset.

Metadata describes many aspects of a dataset, such as its shape, its statistical characteristics, and its quality. The last of these, data quality, can be hard to quantify without additional tooling. That’s where Great Expectations comes in.

Great Expectations lets users define assertions about data assets that are validated whenever the data is updated. A validation can fail for several reasons: the data may be unreliable, it may have changed, or the understanding of the data itself may be incorrect. In any case, the more places an unreliable state of the data is surfaced, the less likely it is that someone makes a poor decision or mistake based on it.
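To make the idea of an assertion that is re-validated on every update concrete, here is a minimal pure-Python sketch. It does not use the Great Expectations API: the `Expectation` class, the `values_not_null`, `values_between`, and `validate` helpers, and the sample columns are hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass
class Expectation:
    """A named assertion about a batch of rows (hypothetical, not the GE class)."""
    name: str
    check: Callable[[List[Row]], bool]


def values_not_null(column: str) -> Expectation:
    # Passes only if every row has a non-null value in `column`.
    return Expectation(
        name=f"{column}_not_null",
        check=lambda rows: all(row.get(column) is not None for row in rows),
    )


def values_between(column: str, low: float, high: float) -> Expectation:
    # Passes only if every non-null value in `column` lies within [low, high].
    return Expectation(
        name=f"{column}_between_{low}_{high}",
        check=lambda rows: all(
            low <= row[column] <= high
            for row in rows
            if row.get(column) is not None
        ),
    )


def validate(rows: List[Row], expectations: List[Expectation]) -> Dict[str, bool]:
    """Run every expectation against the latest batch and report pass/fail."""
    return {e.name: e.check(rows) for e in expectations}


# Re-run the same suite whenever a new batch of data lands.
suite = [values_not_null("user_id"), values_between("age", 0, 120)]
batch = [{"user_id": 1, "age": 34}, {"user_id": None, "age": 151}]
print(validate(batch, suite))
```

In this batch both expectations fail: one row is missing a `user_id` and another has an out-of-range `age`. Keeping the suite separate from the data is what allows the same assertions to be re-run on each update.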

Why Great Expectations and DataHub?

With the Great Expectations and DataHub integration, data owners can automatically surface validation outcomes from Great Expectations in the DataHub UI and interact with them through the DataHub API. The API is what makes this integration even sweeter.

Assertions from Great Expectations are not only displayed as documentation; they are also treated like any other metadata event within DataHub. This lets you automatically “circuit break” an orchestration pipeline when an Expectation fails.
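The circuit-breaking idea can be sketched as a guard that runs before a downstream pipeline step. Given the latest assertion results for the dataset the step reads from, it raises an exception if any of them failed, which stops the step. This sketch does not call DataHub: `AssertionResult`, `CircuitBreakerTripped`, `check_circuit_breaker`, `run_downstream_job`, and the in-memory results list are hypothetical stand-ins for results you would fetch through the DataHub API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AssertionResult:
    """Hypothetical record of one assertion run, as it might be fetched from a metadata store."""
    dataset: str
    assertion: str
    passed: bool


class CircuitBreakerTripped(Exception):
    """Raised to stop a downstream step when upstream data fails validation."""


def check_circuit_breaker(dataset: str, results: List[AssertionResult]) -> None:
    # Consider only results for this dataset, and trip on any failure.
    failed = [r.assertion for r in results if r.dataset == dataset and not r.passed]
    if failed:
        raise CircuitBreakerTripped(f"{dataset} failed assertions: {', '.join(failed)}")


def run_downstream_job(dataset: str, results: List[AssertionResult]) -> str:
    # Guard first; the job body only runs if every assertion passed.
    check_circuit_breaker(dataset, results)
    return f"processed {dataset}"


results = [
    AssertionResult("orders", "order_id_not_null", True),
    AssertionResult("users", "age_between_0_120", False),
]
print(run_downstream_job("orders", results))
try:
    run_downstream_job("users", results)
except CircuitBreakerTripped as err:
    print(f"pipeline halted: {err}")
```

Raising an exception is a deliberate design choice. In an orchestrator such as Airflow, an exception marks the task as failed, so dependent tasks do not run under the default trigger rule, and no extra control flow is needed.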

With Great Expectations and DataHub, business and technical stakeholders can understand and act on the state of the data in whatever framework suits them best.

Getting started with DataHub and Great Expectations

If you’ve got an engineering-heavy team and notice you spend a lot of time finding data, check out DataHub. Tools like DataHub allow data documentation and data quality to be understood and discussed all in one place. With this new integration, data quality and collaboration work hand in hand to improve data literacy across your organization.

Check out the demo from Great Expectations Community Day below, or get started with DataHub and Great Expectations.