In our previous post on the importance of data quality in ML Ops, we discussed how data testing and data validation fit into ML workflows, and why we consider them to be absolutely crucial components of ML Ops. In this post, we’ll go a little deeper into Great Expectations as one such framework for data testing and documentation, and outline some example deployment patterns and Expectation types that suit the needs of an ML pipeline.

Recap: What is “Great Expectations”?

Great Expectations is the leading open source data validation and documentation framework. The core abstraction lets users specify what they expect from their data in simple, declarative statements. We call them Expectations:

```python
# assume that batch is a batch of data you want to validate, e.g. a pandas dataframe
batch.expect_column_values_to_be_unique(column="id")
# returns: true, if all values in the id column are unique, or false otherwise
```

We can also specify that we expect most of the values to match our expectations, based on the existing data and/or domain knowledge:

```python
batch.expect_column_values_to_be_between(column="age", min_value=0, max_value=100, mostly=0.99)
# returns: true, if at least 99% of values in the "age" column are between 0 and 100
```

“Mostly” is the kind of expressivity in Great Expectations that’s really useful for analyses of this type, but hard to implement when you’re building it yourself, for example in SQL!
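To make the semantics of “mostly” concrete, here is a minimal plain-Python sketch of the check it describes. It is not the Great Expectations implementation: the helper names `fraction_within_range` and `passes_mostly` and the sample data are hypothetical, and the sketch assumes that missing values are left out when the passing fraction is computed.

```python
from typing import Optional, Sequence


def fraction_within_range(values: Sequence[Optional[float]],
                          min_value: float,
                          max_value: float) -> float:
    """Return the share of non-null values inside [min_value, max_value]."""
    non_null = [v for v in values if v is not None]
    if not non_null:
        # Assumption of this sketch: with nothing to check, nothing fails.
        return 1.0
    passing = sum(1 for v in non_null if min_value <= v <= max_value)
    return passing / len(non_null)


def passes_mostly(values, min_value, max_value, mostly=1.0) -> bool:
    """True when at least `mostly` of the non-null values are within range."""
    return fraction_within_range(values, min_value, max_value) >= mostly


# Hypothetical data: 199 plausible ages and one data-entry error.
ages = [30] * 199 + [430]
strict = passes_mostly(ages, 0, 100)                 # every value must match
tolerant = passes_mostly(ages, 0, 100, mostly=0.99)  # up to 1% may fail
```

With one bad value in 200, the strict check fails while the `mostly=0.99` check passes, because 99.5% of the values are within range.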

If you haven’t yet tried out Great Expectations, check out our “Getting started” tutorial, which will teach you how to set up an initial local deployment, create Expectations, validate some sample data, and navigate Data Docs (our data quality reports) in about 20 minutes. Don’t forget to come back here when you’re done!

Examples of useful built-in Expectation types

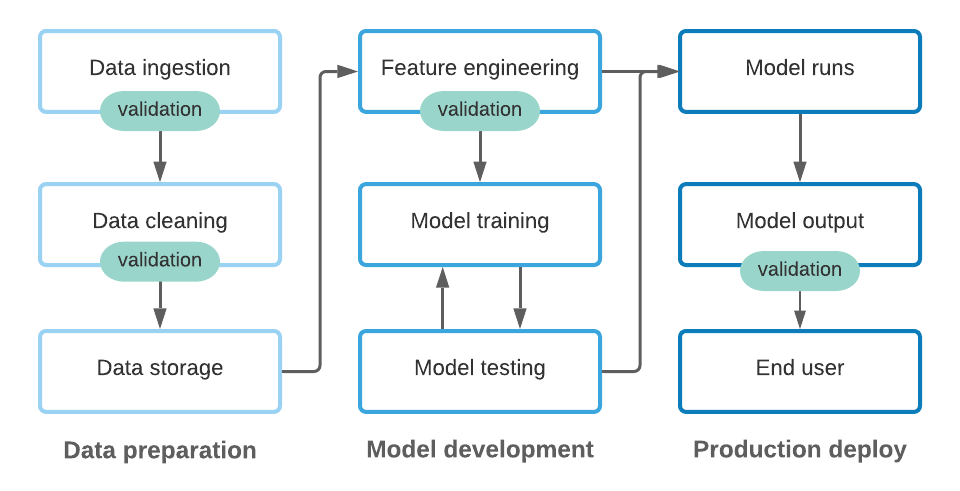

As we mentioned in our first post on ML Ops, we generally think of an ML pipeline in three different stages. In very simplified terms, data is ingested from internal or external sources, transformed using code, and stored in a data warehouse or similar. Data scientists then use that data for model development, which may include coding additional transformations for feature engineering, training, and testing the model. Once a model is deployed to production, it continuously processes input data to output predictions based on the model configuration. In short, an ML workflow depends on both code and data, and ML Ops needs to take care of both when it comes to testing.

The Great Expectations library currently contains several dozen built-in Expectation types that let users specify a wide range of Expectations, from simple table row counts and missingness of values to complex distributions of values, means, and standard deviations. Here are just some examples that can be relevant in an ML context, but if you want to see the complete list of Expectations, check out our Glossary of Expectations!

At the most basic level, users can secure all data assets in their pipeline against critical flaws such as NULL values and missing rows or columns with some of the more straightforward Expectations. The names of Expectations are fairly self-explanatory, so we’ve skipped descriptions where they’re obvious!

For example, in order to assert the shape of a table, we can use the following table-level Expectations:

```python
expect_table_row_count_to_be_between(min_value=90, max_value=110)
expect_table_columns_to_match_ordered_list(column_list=["id", "age", "boro"])
```

At a column level, some of the more basic assertions include:

```python
expect_column_values_to_not_be_null(column="id")
expect_column_values_to_be_unique(column="id")
expect_column_values_to_be_increasing(column="id")
```

In addition, as mentioned above, we can add the “mostly” parameter to most Expectations to allow a certain amount of noise in our data.

For continuous data, we can easily configure Expectations that define acceptable ranges for a column’s aggregates, such as its maximum, mean, and standard deviation:

```python
# bounds are numeric, not strings
expect_column_max_to_be_between(column="age", min_value=70, max_value=120)
expect_column_mean_to_be_between(column="age", min_value=30, max_value=50)
expect_column_stdev_to_be_between(column="age", min_value=5, max_value=10)
```

Great Expectations also comes with automated Profilers that help you determine these ranges based on historical data. This means you can quickly check whether a new batch of data you’re processing is within those ranges, or whether it contains any outliers. At the time of writing this blog post (September 2020), the Great Expectations team is working hard on making automated Profilers even smarter. (Join the Great Expectations Slack for updates on new releases!)
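The idea of deriving ranges from historical data can be illustrated with a small plain-Python sketch. This is not how the Great Expectations Profilers work internally; the function names, the padding rule, and the sample batches are all assumptions made for illustration.

```python
from statistics import mean


def suggest_mean_range(historical_batches, padding=0.1):
    """Suggest (min_value, max_value) for a column mean from past batches.

    The range spans the observed batch means, widened on each side by
    `padding` times the spread between the lowest and highest mean.
    """
    batch_means = [mean(batch) for batch in historical_batches]
    low, high = min(batch_means), max(batch_means)
    margin = (high - low) * padding
    return low - margin, high + margin


def mean_is_within(batch, min_value, max_value):
    """Check whether the mean of a new batch falls inside the suggested range."""
    return min_value <= mean(batch) <= max_value


# Hypothetical "age" values from three earlier batches.
history = [[30, 40, 50], [35, 45, 55], [32, 42, 52]]
min_value, max_value = suggest_mean_range(history)

typical_batch_ok = mean_is_within([38, 41, 47], min_value, max_value)
drifted_batch_ok = mean_is_within([60, 70, 80], min_value, max_value)
```

The resulting bounds could then serve as the `min_value` and `max_value` arguments of an Expectation such as `expect_column_mean_to_be_between`.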

For categorical data, we can apply value-set and distributional Expectations to ensure that all values in a column only contain specific values, which may be distributed in a certain way. This is particularly relevant in a production environment, where we want to ensure that the data input into our model doesn’t drift too far from the training data. Some of those Expectations include:

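```python
expect_column_values_to_be_in_set(column="boro", value_set=["bronx", "brooklyn", "manhattan", "staten island", "queens"])
# partition_object is left empty here; in practice it describes the expected distribution
expect_column_kl_divergence_to_be_less_than(column="boro", partition_object={})
```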
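To show what a distributional check on categorical data measures, here is a minimal plain-Python sketch of a KL divergence between a reference distribution (for example, the training data) and a new batch. The helper names, the sample data, and the dictionary layout with `values` and `weights` keys are assumptions of this sketch; consult the Great Expectations documentation for the exact format of a partition object.

```python
import math
from collections import Counter


def categorical_partition(values):
    """Summarize a categorical column as categories and their relative weights."""
    counts = Counter(values)
    total = sum(counts.values())
    categories = sorted(counts)
    return {"values": categories,
            "weights": [counts[c] / total for c in categories]}


def kl_divergence(observed, expected_partition):
    """KL divergence of the observed data from the expected distribution (natural log)."""
    expected = dict(zip(expected_partition["values"], expected_partition["weights"]))
    observed_partition = categorical_partition(observed)
    divergence = 0.0
    for category, p in zip(observed_partition["values"], observed_partition["weights"]):
        q = expected.get(category, 0.0)
        if q == 0.0:
            return math.inf  # category never seen in the reference data
        divergence += p * math.log(p / q)
    return divergence


# Hypothetical training data and two production batches.
training = (["bronx"] * 20 + ["brooklyn"] * 30 + ["manhattan"] * 25
            + ["queens"] * 20 + ["staten island"] * 5)
reference = categorical_partition(training)

same_shape = kl_divergence(training, reference)
drifted = kl_divergence(["manhattan"] * 90 + ["bronx"] * 10, reference)
unseen = kl_divergence(["jersey city"] * 10, reference)
```

A batch with the same category shares as the reference yields a divergence of zero, a batch dominated by one borough yields a clearly positive value, and a category that never appeared in the reference data makes the divergence infinite.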

In the future, we are planning to develop more Expectation types with even more expressivity to validate model input and output data. Examples may include an Expectation to assert a correlation between two columns, or a Gaussian distribution to test the input to parametric models. Another idea would be Expectations that safeguard the fidelity of vector properties (norm, inner product, etc.) as well as the often-used statistics, such as the ROC curve attributes (precision, recall, etc.). We would love to invite contributions from our community to add new Expectation types for these kinds of use cases. Join our #contributor-contributing Slack channel if you'd like to contribute!

Deployment patterns in ML pipelines

From a data engineering perspective, Great Expectations is a Python library that can easily be integrated into ML pipelines in many different patterns. In this section, we’ll showcase a few examples of how Great Expectations can be deployed in an ML workflow. This is by no means a comprehensive list since there are so many different configurations, workflows, and environments, but we hope it will give you a good idea of how you can implement data validation using Great Expectations!

One way to get started quickly is to just use the Great Expectations library to write in-line assertions to test data in an interactive environment. The Expectation methods can operate directly on a dataframe that’s loaded into memory, e.g. a Pandas or PySpark dataframe in a Jupyter notebook on a developer’s machine, or a hosted environment such as Databricks.

Our “golden path” workflow is to have a full deployment of a Great Expectations Data Context, where the Expectation creation and validation processes are separated: a team can create Expectation Suites that group Expectations together, store them in a shared location (e.g. check them into a repository, an S3 bucket, etc.), and load them at pipeline runtime to validate an incoming batch of data. This kind of setup also allows you to generate Data Docs, which can be used to document your assertions about your data and establish a “data contract” between stakeholders, as well as provide a data quality report of every validation run.
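The separation between creating a suite and validating against it can be sketched in plain Python. This toy example does not use the Great Expectations Data Context or its file formats: the JSON layout, the check names in `CHECKS`, and the functions `save_suite` and `validate` are hypothetical and only illustrate the pattern.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical checks keyed by name; each takes the rows plus keyword arguments.
CHECKS = {
    "values_not_null":
        lambda rows, column: all(r.get(column) is not None for r in rows),
    "row_count_between":
        lambda rows, min_value, max_value: min_value <= len(rows) <= max_value,
}


def save_suite(path, expectations):
    """Creation step: persist the agreed checks to a shared location."""
    Path(path).write_text(json.dumps({"expectations": expectations}, indent=2))


def validate(path, rows):
    """Validation step: load the stored suite and evaluate it against a batch."""
    suite = json.loads(Path(path).read_text())
    results = [
        {"check": e["check"], "success": CHECKS[e["check"]](rows, **e["kwargs"])}
        for e in suite["expectations"]
    ]
    return {"success": all(r["success"] for r in results), "results": results}


# The suite is written once (here to a temporary directory standing in for a repo).
suite_path = Path(tempfile.mkdtemp()) / "users_suite.json"
save_suite(suite_path, [
    {"check": "values_not_null", "kwargs": {"column": "id"}},
    {"check": "row_count_between", "kwargs": {"min_value": 1, "max_value": 3}},
])

# Later, at pipeline runtime, each incoming batch is validated against it.
good_report = validate(suite_path, [{"id": 1, "age": 34}, {"id": 2, "age": 51}])
bad_report = validate(suite_path, [{"id": 1, "age": 34}, {"id": None, "age": 51}])
```

Because the suite lives in a file rather than in the pipeline code, the people who define the checks and the process that runs them only need to agree on where the file is stored.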

In a production environment, Great Expectations can also be integrated into a workflow orchestration framework: An ML pipeline managed by a DAG runner such as Airflow can easily include a node to run data validation by loading an Expectation Suite, either after each transformation step, or at the end of a larger subset of the DAG.
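As a rough illustration of validating after each transformation step, the following plain-Python sketch runs a list of steps and stops as soon as a check fails. It does not use Airflow or Great Expectations; `run_pipeline`, `ValidationFailed`, and the two steps are hypothetical stand-ins for DAG nodes and Expectation Suites.

```python
class ValidationFailed(Exception):
    """Raised to stop the pipeline when a batch does not meet its checks."""


def run_pipeline(batch, steps):
    """Run (name, transform, check) steps in order, validating after each one."""
    for name, transform, check in steps:
        batch = transform(batch)
        if not check(batch):
            raise ValidationFailed(f"validation failed after step '{name}'")
    return batch


steps = [
    ("drop_missing_ages",
     lambda rows: [r for r in rows if r["age"] is not None],
     lambda rows: len(rows) > 0),
    ("add_is_adult",
     lambda rows: [{**r, "is_adult": r["age"] >= 18} for r in rows],
     lambda rows: all("is_adult" in r for r in rows)),
]

rows = [{"id": 1, "age": 34}, {"id": 2, "age": None}, {"id": 3, "age": 12}]
result = run_pipeline(rows, steps)
```

Validating after every step pinpoints which transformation introduced a problem, while a single check at the end of a larger subset of the DAG is cheaper but less specific.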

A great example of an ML pipeline using Great Expectations can be found in Steven Mortimer's scikit-learn project. In this example, Great Expectations is integrated into an ML pipeline to validate the raw data, the modeling data, as well as the holdout error data.

And finally, Great Expectations can also be integrated into the CI/CD process to test ingestion pipeline and model code as the code changes. In the same way as integration tests for software are run (usually on test fixtures), Expectations can be used in a test environment against fixture data to validate that the pipeline or model output is still consistent with our Expectations. While this is typically done using a 1:1 comparison of a “golden output” data set generated by running the code on a fixed data input, using Great Expectations eliminates the need to maintain and adjust such a “golden output” data set whenever code is intentionally changed. Moreover, since Great Expectations describes the characteristics of the expected output rather than every single field of it, the same tests (Expectations) work for both the fixed input and the “real” data input when running production pipelines.
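The difference between a “golden output” comparison and testing the characteristics of the output can be sketched with the standard `unittest` module. The pipeline step `build_features`, the fixture, and the individual checks are hypothetical; in a real setup the assertions would be Expectations rather than hand-written tests.

```python
import unittest


def build_features(rows):
    """Hypothetical pipeline step under test: derive an age bucket per row."""
    return [{"id": r["id"], "age_bucket": min(r["age"] // 10 * 10, 90)}
            for r in rows]


FIXTURE = [{"id": 1, "age": 34}, {"id": 2, "age": 51}, {"id": 3, "age": 97}]


class FeatureOutputTest(unittest.TestCase):
    """Checks describe properties of the output, not its exact contents."""

    def setUp(self):
        self.output = build_features(FIXTURE)

    def test_row_count_is_preserved(self):
        self.assertEqual(len(self.output), len(FIXTURE))

    def test_ids_are_unique(self):
        ids = [r["id"] for r in self.output]
        self.assertEqual(len(ids), len(set(ids)))

    def test_age_bucket_in_allowed_set(self):
        allowed = set(range(0, 100, 10))
        self.assertTrue(all(r["age_bucket"] in allowed for r in self.output))


# Run the tests programmatically, as a CI job might.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(FeatureOutputTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

None of these tests needs to change if the bucketing logic is intentionally adjusted within the allowed set, and the same properties can be checked on production output.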

Wrap-up

In this post, we’ve shown you some examples of Expectation types and deployment patterns to implement data testing and documentation in an ML Ops environment. These are just some of the examples we’ve seen of how our users apply Great Expectations in their ML pipelines. We’d love to hear from you if you have any interesting use cases! Please stop by our Slack channel or post on GitHub Discussions.