Our mission is to give you everything you need to trust your data. This year, every enhancement we shipped was designed to put trusted data at practitioners' fingertips.

Simply put, data quality is critical to every initiative in your organization. Poor data quality already costs U.S. businesses an estimated $3.1 trillion annually, and as AI adoption accelerates, the cost of low-quality data only grows. In fact, data quality is a top concern for companies trying to make AI work for them.

In 2024, only 19% of organizations cited data quality issues as a top challenge. By 2025, that number had more than doubled to 44%, making it the single biggest reported roadblock to AI project success.

Teams can't use data they don't trust, so they must understand which data can be trusted, why it's trusted, and what to do when it isn't.

Whether it’s building the foundation of your data governance program or supercharging AI initiatives, your data must meet expectations to get the job done.

As the creators of the world's most popular data quality framework, we're proud to help teams collaborate on data work by making data quality clear, actionable, and aligned with real business needs.

Here’s everything we built.

Turning Human Knowledge into Trusted Rules

One of the biggest barriers to data quality has always been translation: turning business logic into reliable tests.

ExpectAI

This year, we introduced ExpectAI, a new way to quickly set Expectations that starts with what your data means in the real world.

Instead of crafting rules from scratch, ExpectAI analyzes real dataset patterns and generates suggested Expectations you can review, refine, and approve. Over the year, we expanded ExpectAI beyond Snowflake to PostgreSQL, Databricks SQL, and Amazon Redshift, making AI-assisted rule creation a core workflow across GX Cloud.

The result: faster setup, fewer false positives, and rules that reflect real-world usage.

Writing Tests That Match Reality

Data quality logic is rarely one-size-fits-all. In 2025, we made Expectations dramatically more expressive, without requiring SQL expertise.

Row Conditions, Reimagined

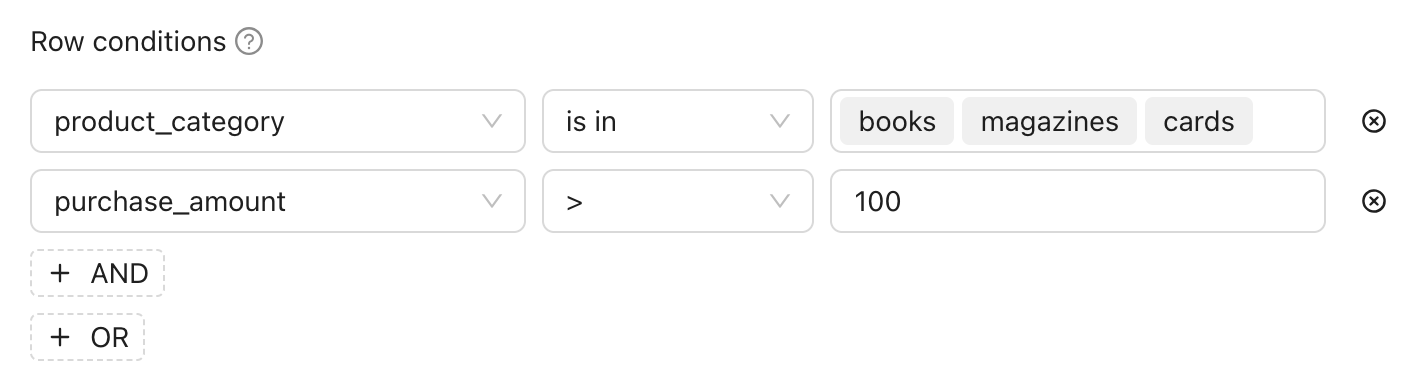

We introduced row condition sections, which let you apply an Expectation only to the rows where it matters. Then we expanded them to support multiple row conditions, enabling:

AND / OR logic blocks

Grouped condition sets

New list-based operators such as "is any of" and "is none of"

This allows teams to encode nuanced business logic—like conditional requirements, thresholds, and lifecycle-based rules—directly in the UI.
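To make the grouped logic concrete, here is a minimal Python sketch of how AND/OR groups and list operators could combine to decide which rows an Expectation applies to. The `Condition` and `Group` classes, the `expect_not_null_where` helper, and the sample `orders` columns are hypothetical illustrations, not the GX Cloud API; in GX Cloud these conditions are configured in the UI.

```python
from dataclasses import dataclass
from typing import Any, Union

# Hypothetical types for illustration only; this is not the GX Cloud API.
@dataclass
class Condition:
    column: str
    operator: str  # "equals", "is any of", or "is none of"
    value: Any

@dataclass
class Group:
    logic: str     # "AND" or "OR"
    members: list  # Conditions and/or nested Groups

Node = Union[Condition, Group]

def matches(row: dict, node: Node) -> bool:
    """Return True if the row satisfies the (possibly nested) condition tree."""
    if isinstance(node, Group):
        results = (matches(row, m) for m in node.members)
        return all(results) if node.logic == "AND" else any(results)
    cell = row.get(node.column)
    if node.operator == "equals":
        return cell == node.value
    if node.operator == "is any of":
        return cell in node.value
    if node.operator == "is none of":
        return cell not in node.value
    raise ValueError(f"unknown operator: {node.operator}")

def expect_not_null_where(rows: list, column: str, where: Node) -> list:
    """Return rows that match `where` but have a null in `column` (the failures)."""
    return [r for r in rows if matches(r, where) and r.get(column) is None]

# Example rule: shipped orders that are in the US or Canada, OR that were
# not placed in store, must have a tracking number.
rule = Group("AND", [
    Condition("status", "equals", "shipped"),
    Group("OR", [
        Condition("country", "is any of", ["US", "CA"]),
        Condition("channel", "is none of", ["in_store"]),
    ]),
])
orders = [
    {"status": "shipped", "country": "US", "channel": "web", "tracking_number": "1Z9"},
    {"status": "shipped", "country": "CA", "channel": "web", "tracking_number": None},
    {"status": "pending", "country": "US", "channel": "web", "tracking_number": None},
]
failures = expect_not_null_where(orders, "tracking_number", rule)
```

Only the second order fails: the third one also lacks a tracking number, but the row condition excludes it because it hasn't shipped yet.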

Better SQL Authoring

For teams that do write SQL, we streamlined the experience with inline SQL prompts, editable AI-generated SQL, and full visibility into the context used to generate it.

Together, these updates make Expectations easier to write, review, and trust.

Making Data Health Visible at a Glance

It's easier to trust data when you can plainly see that it meets expectations.

The Data Health Dashboard

We introduced the Data Health Dashboard as a shared home for data quality in GX Cloud. It gives teams a single, consistent view of:

Daily health scores that track trends over time

Coverage visibility to show what’s being tested—and what isn’t

Failures ranked by business impact, so teams know where to act first

All of this is available in one click. Engineers get the technical detail they need, leaders get a clear signal they can act on, and everyone works from the same view of data quality.

Metric Filters: From Overview to Insight

As teams began using the dashboard, a new need quickly emerged: the ability to zoom in. This year, we added metric filters, allowing teams to slice dashboard metrics by data source, data asset (table or view), or column.

With a single, intelligent search bar, users can narrow the dashboard to exactly what matters, whether that's a specific warehouse, a critical table, or even all columns named "id" across systems. Metrics like Data Health, Failed Expectations, and Coverage automatically update to reflect the filtered scope.

This turns the dashboard from a static summary into an interactive workflow. Leaders can assess health for a specific domain or team. Engineers can drill into problem areas without leaving the page. Teams can share focused views so everyone agrees on which data is under discussion.

By pairing high-level health signals with precise filtering, the Data Health Dashboard now supports both shared understanding and targeted action.

Coverage Metrics

We also rolled out coverage metrics, giving teams the ability to spot low-coverage data assets instantly, identify missing or stale Expectations, and prioritize where to invest next. It's never been easier to understand and improve your test coverage in GX Cloud.

Detecting Issues Before They Escalate

Instead of waiting for failures, we help you catch problems early.

Anomaly Detection

This year we introduced two kinds of anomaly detection: completeness anomaly detection, which automatically flags unexpected spikes in null values, and volume anomaly detection, which catches sudden changes in row count.

Both rely on historical patterns instead of manually set thresholds, so teams can detect issues before downstream users do.
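One common way to flag anomalies from history without hand-set limits is to derive an expected range from recent values. The sketch below uses mean ± k standard deviations. This is an assumed technique for illustration, not a description of how GX Cloud's detectors work, and `is_anomalous` and the sample numbers are hypothetical.

```python
import statistics

def is_anomalous(history: list[float], latest: float, k: float = 3.0) -> bool:
    """Flag `latest` if it falls outside mean +/- k standard deviations of history.

    The bounds come from the data itself, so nobody has to hand-pick an
    absolute threshold such as "alert below 10,000 rows". (Illustrative
    technique only; not GX Cloud's actual detector.)
    """
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean  # perfectly stable history: any change stands out
    return abs(latest - mean) > k * stdev

# Volume: daily row counts for a table, then today's count.
daily_rows = [10000, 10120, 9950, 10080, 10010]
volume_drop = is_anomalous(daily_rows, 4200)    # sudden drop in rows
volume_normal = is_anomalous(daily_rows, 10050)  # ordinary day

# Completeness: daily fraction of nulls in a column, then today's fraction.
null_fraction = [0.010, 0.012, 0.011, 0.009, 0.010]
null_spike = is_anomalous(null_fraction, 0.18)
```

The same function covers both signals because each is just a daily metric compared against its own history; production detectors typically also account for seasonality (for example, weekend dips), which this sketch ignores.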

Validation Across Multiple Systems

Modern data stacks don’t live in one place. Neither should validation.

Multi-source Expectations

We shipped Multi-source Expectations, allowing teams to compare SQL query results across two data sources, such as Snowflake, PostgreSQL, or Redshift.

With minimal configuration, Multi-source Expectations can detect data drift introduced during ETL by surfacing discrepancies between Data Sources in schemas, counts, time windows, data types, and precision levels.
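Conceptually, a multi-source check runs a query against each source and compares the result sets. The sketch below uses two in-memory SQLite databases as stand-ins for real sources and a hypothetical `compare_sources` helper. It is not how GX implements Multi-source Expectations, and it skips the type and precision normalization that a real cross-database comparison would need.

```python
import sqlite3

def make_db(rows: list[tuple[str, int]]) -> sqlite3.Connection:
    """Create an in-memory stand-in for a data source (assumption: SQLite)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_date TEXT, order_id INTEGER)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn

def compare_sources(source: sqlite3.Connection, target: sqlite3.Connection,
                    source_sql: str, target_sql: str) -> dict:
    """Return result rows that appear in one source but not the other."""
    source_rows = set(source.execute(source_sql).fetchall())
    target_rows = set(target.execute(target_sql).fetchall())
    return {
        "missing_in_target": sorted(source_rows - target_rows),
        "unexpected_in_target": sorted(target_rows - source_rows),
    }

# The "source" has three orders; the ETL load into the "warehouse" dropped one.
source_db = make_db([("2025-01-01", 1), ("2025-01-01", 2), ("2025-01-02", 3)])
warehouse_db = make_db([("2025-01-01", 1), ("2025-01-02", 3)])

# Compare daily counts, i.e. counts per time window.
daily_counts = "SELECT order_date, COUNT(*) FROM orders GROUP BY order_date"
diff = compare_sources(source_db, warehouse_db, daily_counts, daily_counts)
```

Comparing aggregates per time window, rather than every row, keeps the check cheap and points straight at the day where the load went wrong. Here, the diff shows that 2025-01-01 has two orders in the source but only one in the warehouse.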

Scaling Collaboration with Clear Ownership

As data teams grow, trust depends on accountability.

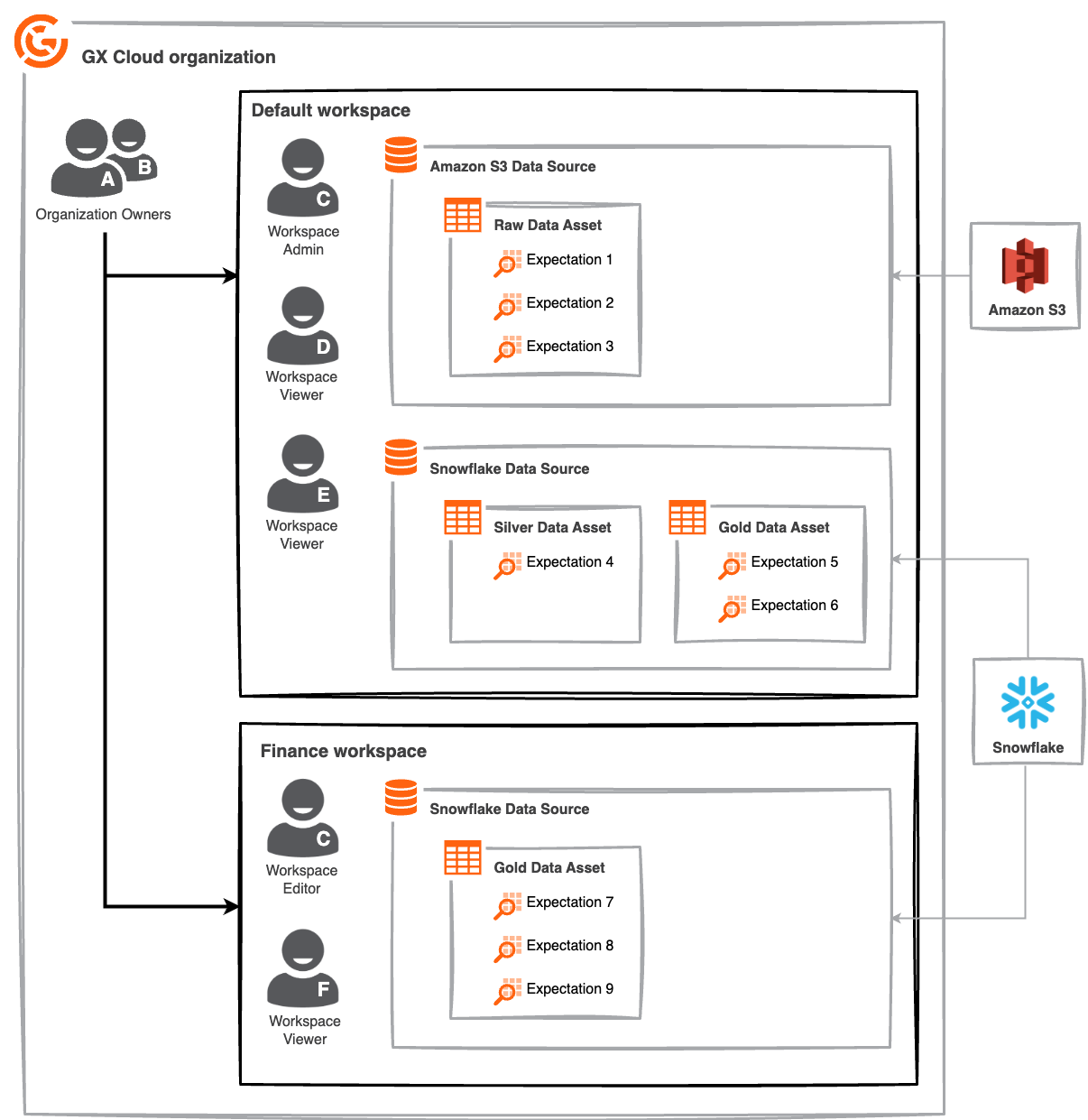

Workspaces

Workspaces bring team-scoped environments to GX Cloud, with clear ownership boundaries and role-based access that reduce clutter and risk.

Teams can now collaborate without stepping on each other or overexposing sensitive assets.

Severity Tags

Not all failures need the same treatment. Severity tags allow Expectations to be marked as Critical, Warning, or Info, reducing alert noise and ensuring urgent issues get the attention they deserve.
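As a rough illustration of how severity levels can cut noise, the sketch below routes failed Expectations to different destinations based on their severity. The routing table, destinations, and Expectation names are hypothetical, and GX Cloud's actual alerting configuration may differ.

```python
from enum import Enum

class Severity(Enum):
    CRITICAL = "Critical"
    WARNING = "Warning"
    INFO = "Info"

# Hypothetical routing table: where failures of each severity should go.
ROUTES = {
    Severity.CRITICAL: "page on-call",
    Severity.WARNING: "post to team channel",
    Severity.INFO: "log only",
}

def route_failures(failures: list[tuple[str, Severity]]) -> dict[str, list[str]]:
    """Group failed Expectation names by the action their severity calls for."""
    routed: dict[str, list[str]] = {action: [] for action in ROUTES.values()}
    for name, severity in failures:
        routed[ROUTES[severity]].append(name)
    return routed

failures = [
    ("orders.id is not null", Severity.CRITICAL),
    ("orders.discount is within range", Severity.WARNING),
    ("orders.notes length is below limit", Severity.INFO),
]
routed = route_failures(failures)
```

Only the Critical failure would page anyone. The other two are still recorded, but in places where they won't interrupt the team.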

Expanding Where GX Runs

Data quality tooling only builds trust if it fits into your existing stack.

In 2025, we expanded Amazon Redshift support, improved SQL type handling, restored Custom Actions in GX Core, and continued hardening execution engines across dialects. We also introduced a centralized view of supported data sources, making it easier to understand coverage and request what’s next.

We also formally sunset GX Core versions 0.18 and earlier, clearing the path forward with cleaner APIs, better typing, and a more consistent developer experience.

Looking Ahead

Everything we shipped this year points in the same direction: data quality that’s visible, shared, and actionable.

Whether you're defining rules, monitoring health, collaborating across teams, or validating across systems, our goal is to make GX the place where teams build trust in their data, together.

Resolving to have better data quality in 2026?

Now’s your chance to try GX Cloud. Start a free trial and join us at an upcoming workshop to get a head start on your data quality initiatives for this year.