I’m sure we all know the feeling of “yes, this is a good idea and we should do this”, but there’s just never the right time for it, until several things come together and it just kind of happens? That’s basically the story of the Great Expectations operator for Apache Airflow! But before we all get cozy around the fireplace and listen to The Great Expectations Airflow Operator Story of 2020, let’s make this official first: We have just released a first version of an Airflow provider for Great Expectations!

The provider comes with an operator that runs Great Expectations validation as a task in your Airflow DAGs.

What does it do?

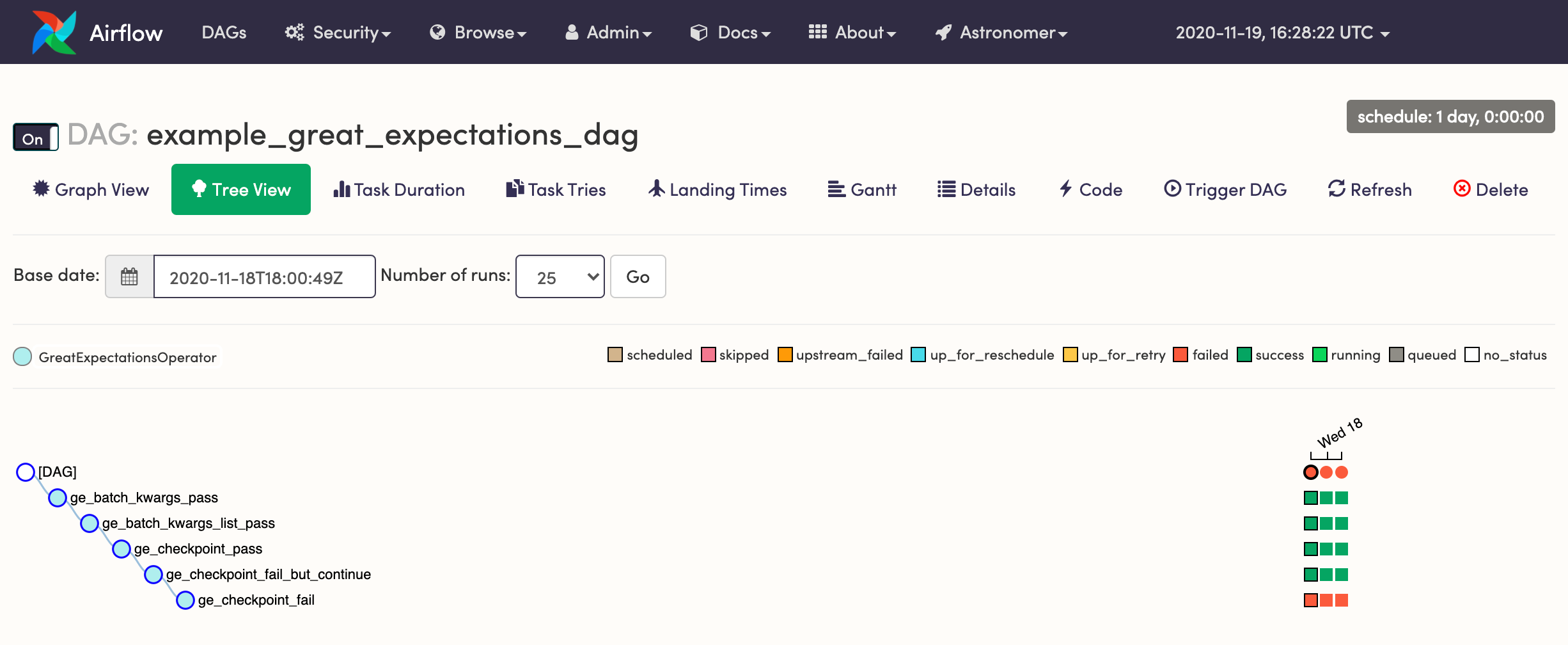

The Great Expectations provider ships with a built-in operator that can run validation in three ways:

1. Pass in batch_kwargs and an Expectation Suite name, which is then loaded from your Data Context and used to run validation against the batch.
2. Pass in a list of dicts, each containing batch_kwargs and an Expectation Suite name, to validate several batches at once.
3. Pass in the name of a pre-configured Checkpoint (a shorthand for both 1 and 2) and kick off validation.
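To make the three invocation styles concrete, here is a minimal, hypothetical sketch in plain Python of how an operator could check that exactly one style was used and normalize it before running validation. It imports neither Airflow nor Great Expectations, and the names (`normalize_inputs`, `ValidationRequest`, `assets_to_validate`, and the other parameters) are illustrative assumptions, not the provider's actual signature. In a real operator, logic like this would sit in the constructor or in `execute()`.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

# Keys every entry in the list-of-dicts style must carry (hypothetical names).
REQUIRED_KEYS = {"batch_kwargs", "expectation_suite_name"}


@dataclass
class ValidationRequest:
    """Hypothetical normalized form of the operator's validation inputs."""
    mode: str  # "single", "list", or "checkpoint"
    assets: List[Dict[str, Any]]  # each: {"batch_kwargs": ..., "expectation_suite_name": ...}
    checkpoint_name: Optional[str] = None


def normalize_inputs(
    batch_kwargs: Optional[Dict[str, Any]] = None,
    expectation_suite_name: Optional[str] = None,
    assets_to_validate: Optional[List[Dict[str, Any]]] = None,
    checkpoint_name: Optional[str] = None,
) -> ValidationRequest:
    """Accept exactly one of the three invocation styles and normalize it (hypothetical API)."""
    single = batch_kwargs is not None or expectation_suite_name is not None
    styles_used = sum([single, assets_to_validate is not None, checkpoint_name is not None])
    if styles_used != 1:
        raise ValueError(
            "Pass exactly one of: batch_kwargs + expectation_suite_name, "
            "assets_to_validate, or checkpoint_name"
        )

    # Style 1: a single batch validated against one Expectation Suite.
    if single:
        if batch_kwargs is None or expectation_suite_name is None:
            raise ValueError("batch_kwargs and expectation_suite_name must be passed together")
        return ValidationRequest(
            mode="single",
            assets=[{"batch_kwargs": batch_kwargs, "expectation_suite_name": expectation_suite_name}],
        )

    # Style 2: a list of {batch_kwargs, expectation_suite_name} dicts.
    if assets_to_validate is not None:
        if not assets_to_validate:
            raise ValueError("assets_to_validate must not be empty")
        for item in assets_to_validate:
            missing = REQUIRED_KEYS - set(item)
            if missing:
                raise ValueError(f"Asset dict is missing keys: {sorted(missing)}")
        return ValidationRequest(mode="list", assets=[dict(item) for item in assets_to_validate])

    # Style 3: a named Checkpoint; its own configuration supplies batches and suites.
    return ValidationRequest(mode="checkpoint", assets=[], checkpoint_name=checkpoint_name)
```

For example, `normalize_inputs(checkpoint_name="my_checkpoint")` (a hypothetical Checkpoint name) returns a request in checkpoint mode with no assets, because the Checkpoint's configuration carries the batches and suites. Rejecting mixed inputs up front is one way to keep the three styles from silently overriding each other.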

In addition, we also handle the issue of creating Data Contexts. You can either run the operator from a standard Data Context with a great_expectations directory and a

And finally, we’ve built in a couple of smaller features, like a flag to indicate whether to fail the task on validation failure, and an option to specify a custom validation operator. We decided to keep the add-ons to a minimum for a start, but we’re more than happy to welcome suggestions and contributions! If you’d like to contribute, join our Slack channel and hop into #contributors-contributing for some support!
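The fail-on-failure flag and the custom validation operator option can be sketched the same way. The snippet below is hypothetical: `run_and_check`, `ValidationFailedError`, and the injected `run_validation` callable are illustrative stand-ins (the callable plays the role of whatever actually runs validation against your Data Context), and the `"action_list_operator"` default is an assumption borrowed from a commonly configured Great Expectations validation operator, not a confirmed provider default.

```python
from typing import Any, Callable, Dict, List

# Hypothetical signature for "whatever runs validation": takes a validation
# operator name and a list of assets, returns a result dict with a "success" key.
Runner = Callable[[str, List[Dict[str, Any]]], Dict[str, Any]]


class ValidationFailedError(Exception):
    """Stand-in for the exception a real task would raise (e.g. AirflowException)."""


def run_and_check(
    run_validation: Runner,
    assets: List[Dict[str, Any]],
    validation_operator_name: str = "action_list_operator",  # assumed default
    fail_task_on_validation_failure: bool = True,
) -> Dict[str, Any]:
    """Run validation via the named validation operator, then apply the fail flag."""
    result = run_validation(validation_operator_name, assets)
    # A result without a "success" key is treated as a failure.
    succeeded = bool(result.get("success", False))
    if not succeeded and fail_task_on_validation_failure:
        # Airflow treats an exception raised from an operator's execute() as a task failure.
        raise ValidationFailedError(f"Validation with {validation_operator_name!r} failed")
    return result


if __name__ == "__main__":
    def failing_runner(operator_name: str, assets: List[Dict[str, Any]]) -> Dict[str, Any]:
        # Fake runner that always reports a failed validation.
        return {"success": False, "validation_operator": operator_name}

    # With the flag off, the failed result is returned instead of raising.
    print(run_and_check(failing_runner, [], fail_task_on_validation_failure=False))
```

Raising is the natural way to fail the task in this design; with the flag set to `False`, the failed result is returned so downstream logic can inspect it instead.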

I’ve been waiting for this forever!

Are you ready for a little bit of open source project story time? Here we go! While we know that a lot of Great Expectations users are also Airflow users, and while we got fairly frequent requests for an Airflow operator, we figured “well, running validation with Great Expectations is just Python code and there’s a Python operator, so you’re basically there!”.

This is absolutely true, and it led to a lot of users in the community using Great Expectations in a Python operator, or even taking the next step and writing their own internal operators for Great Expectations. Great! But actually, wouldn’t it be even greater to standardize how users experience Great Expectations and Airflow?

There were only two problems. First, it wasn’t quite clear to us what exactly this “standard” should look like: Great Expectations provides a lot of flexibility in how to invoke validation, so there were several possible approaches. Second, submitting a provider to the core Airflow repository seemed like a project that would require more time than we could really dedicate as a small engineering team that’s heavily focused on core product functionality.

Fortunately, the stars aligned over the past couple of months, and several things happened that helped us accelerate the development:

First, several users from the community responded to a call for Airflow operator proposals, which gave us a really good idea of the most common ways people would use such an operator.

Second, Brian Lavery from the New York Times data team doubled down on his efforts to develop a BigQuery-specific Great Expectations provider during their hackathon, and even submitted a PR against the Airflow repo.

Third, and this was probably one of the biggest boosters, we started collaborating with the Astronomer team, who provided fantastic guidance and insights into the Airflow provider ecosystem. Most importantly, we realized that as of version 2.0 (and retrofitted to recent 1.x versions), Airflow providers are now distributed as their own Python wheels outside of the core Airflow release package, which allows them to be independently versioned and maintained. This has empowered community members to build and ship their providers independently of the core repo, since standard practice for developers is now to install a Python package for a provider rather than import it directly from Airflow. Being able to build and ship our provider from our own repo made the entire development process significantly more straightforward for us and allowed us to knock out a simple provider package within a day. Boom, we have liftoff!

For me personally, this story really illustrates the power of collaboration across the open source community and related projects in the data infrastructure universe. Working together gives us valuable insights into users’ needs while also creating a wonderful Venn diagram of expertise and perspectives on the space. And yes, I’m going to stop it with the space metaphors now.

What’s next?

You tell us! We’re super excited to be releasing the provider to the world and getting feedback - feel free to submit a GitHub issue or a PR if you have ideas for improvements. In addition, we know that a lot of you would love even more convenience methods for specific backends, so if you’re thinking of implementing a subclass of the base

I also want to explicitly thank Brian Lavery, Nick Benthem, Bouke Nederstigt, and the Astronomer team (specifically Pete DeJoy) for dedicating their efforts to supporting this project. It’s been an absolute joy collaborating with you!