Imagine you're a chef, and data is the array of ingredients in your kitchen. Feature engineering is your favorite culinary tool, essential for extracting the right flavors (features) to whip up some machine learning models. But what happens when your tools aren't functioning correctly, and your recipe descends into chaos?

Let's go on a journey where we understand what a feature engineering platform is and the value it adds. We will identify potential pitfalls that can contaminate your data and equip you with strategies to ensure smooth operations. We'll also see how Fennel uses Great Expectations in a role akin to a kitchen assistant dedicated to ensuring your ingredients (data) are of the best quality.

Before diving deeper, let's first understand what a feature engineering platform is.

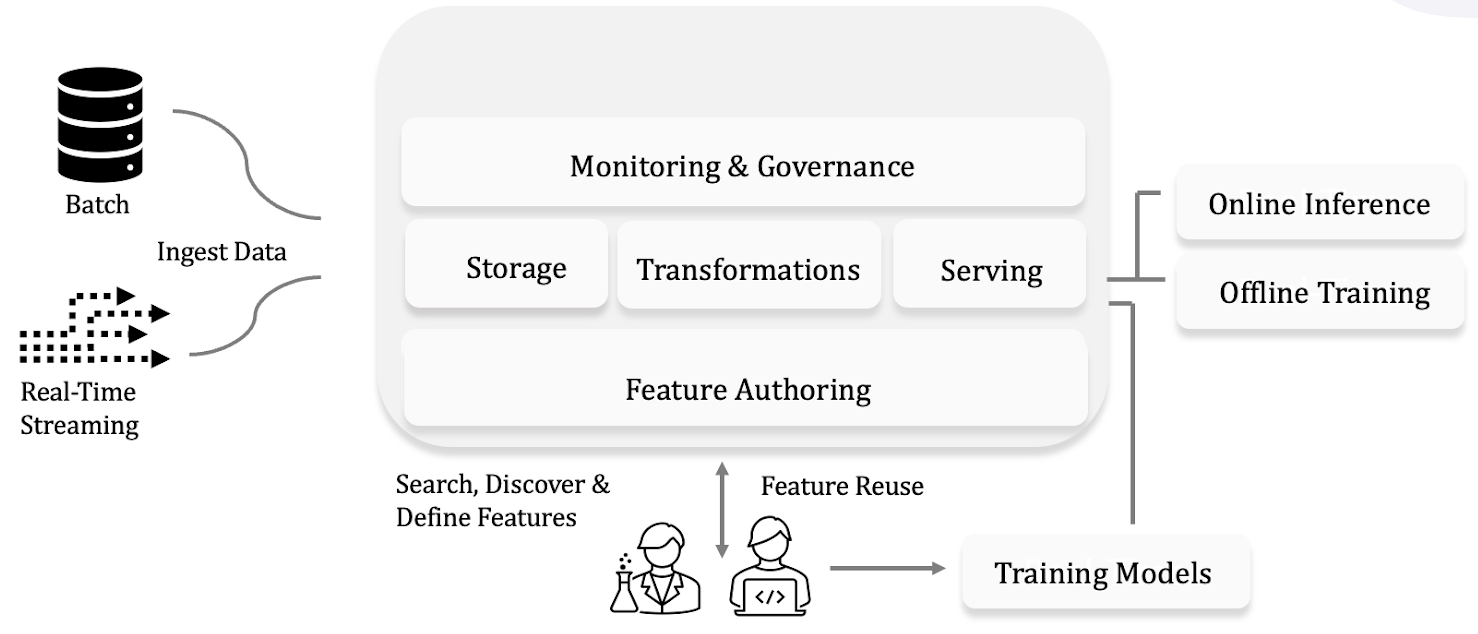

What is a feature engineering platform?

A feature engineering platform is a tool or system that assists in managing the lifecycle of features for machine learning models. Its work begins as soon as raw data arrives, either as batch data from sources such as S3, Delta Lake, Snowflake, or PostgreSQL, or as streaming data from Kafka or Kinesis.

These platforms execute the required computations to store, transform, and finally make the features available at low latency for the models.

They aid in generating training data while ensuring point-in-time correctness, and in monitoring various layers of the stack, from the upstream raw data down to the features being served and, optionally, the model predictions. They also help manage features by making them easy to define and by supporting search and discovery of existing features, thereby promoting reuse.

Data quality in feature engineering

Data quality is paramount in feature engineering. It is the backbone of any machine learning model, and the integrity of the data directly influences the model's performance. As the old saying goes, garbage in, garbage out.

However, maintaining data quality is not without its challenges. Production feature engineering, in particular, presents a unique set of difficulties that can lead to data corruption. Data corruption in feature engineering can stem from various sources:

Changes/failures to upstream pipelines

Time travel

Online-offline skew

Feature drift

Feedback loops

Let’s look deeper into these sources. You can also find information about them in this blog.

Changes/failures to upstream pipelines

Features often depend on a complex network of interrelated data pipelines. Occasionally, the owner of a parent pipeline may inadvertently make changes to an upstream dataset, which can alter the meaning or value of the downstream features.

For instance, an upstream dataset might start exporting data in milliseconds instead of seconds, thereby changing the semantics of the downstream features or introducing silent bugs.

Additionally, pipelines can occasionally fail, which can result in stale features.
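
A lightweight guard on incoming values can catch this kind of unit change before it reaches downstream features. The sketch below is a minimal illustration in plain Python, assuming event timestamps are meant to be epoch seconds. The bounds and the function names (`looks_like_epoch_seconds`, `fraction_out_of_range`) are hypothetical and not part of any library.

```python
from datetime import datetime, timezone

# Assumed plausible window for event timestamps expressed in epoch seconds.
MIN_TS = datetime(2000, 1, 1, tzinfo=timezone.utc).timestamp()
MAX_TS = datetime(2100, 1, 1, tzinfo=timezone.utc).timestamp()


def looks_like_epoch_seconds(ts):
    """True if ts falls inside the assumed epoch-seconds window."""
    return MIN_TS <= ts <= MAX_TS


def fraction_out_of_range(timestamps):
    """Fraction of timestamps that fall outside the assumed window."""
    if not timestamps:
        return 0.0
    bad = sum(1 for ts in timestamps if not looks_like_epoch_seconds(ts))
    return bad / len(timestamps)


# Same two events, exported in seconds and (by mistake) in milliseconds.
seconds_batch = [1_700_000_000, 1_700_000_060]
millis_batch = [1_700_000_000_000, 1_700_000_060_000]

print(fraction_out_of_range(seconds_batch))
print(fraction_out_of_range(millis_batch))
```

A pipeline could alert, or stop processing, when the out-of-range fraction for a batch rises above a small threshold.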

Time travel

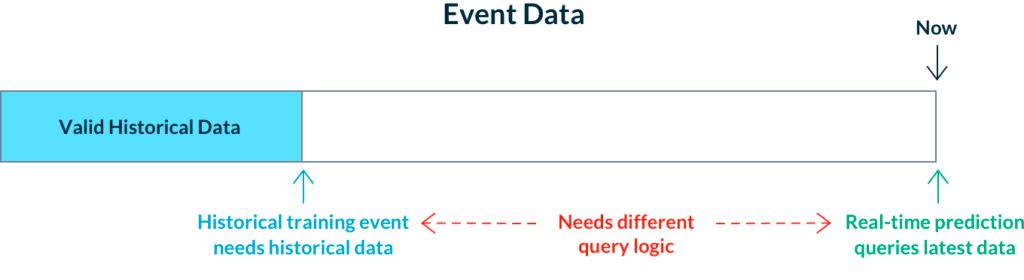

Imagine you’re employed at a bank and are trying to build a fraud detection model. Naturally, you need a training dataset comprising all past transactions. But what you need to keep in mind is that the training data for each transaction should only include events up to, but not including, that transaction's timestamp. Otherwise, you would be leaking future data.

For example, if you have a feature `num_fraud_transactions` for every user and include all events until now, you are inadvertently revealing to the model that a fraud has occurred. This is extremely detrimental and can single-handedly undermine the model's performance in production.

It's also easy to see why this task is challenging. Since every row in the training dataset now needs to refer to different time ranges, ensuring point-in-time accuracy can be quite tricky.
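
To make the idea concrete, here is a minimal sketch of a point-in-time version of `num_fraud_transactions` in plain Python. It assumes each transaction is a dict with hypothetical `user_id`, `ts`, and `is_fraud` fields, and it simplifies by treating the fraud label as known at the time of the transaction. The helper names are illustrative only.

```python
from bisect import bisect_left
from collections import defaultdict


def build_fraud_index(transactions):
    """Map user_id -> sorted timestamps of that user's fraudulent transactions."""
    index = defaultdict(list)
    for t in transactions:
        if t["is_fraud"]:
            index[t["user_id"]].append(t["ts"])
    for ts_list in index.values():
        ts_list.sort()
    return index


def num_fraud_transactions_as_of(index, user_id, ts):
    """Count the user's fraudulent transactions strictly before ts."""
    # bisect_left returns how many sorted timestamps are strictly less than ts,
    # so the transaction at ts itself is never counted.
    return bisect_left(index.get(user_id, []), ts)


transactions = [
    {"user_id": "u1", "ts": 10, "is_fraud": False},
    {"user_id": "u1", "ts": 20, "is_fraud": True},
    {"user_id": "u1", "ts": 30, "is_fraud": False},
]

index = build_fraud_index(transactions)

# Each training row sees only events that happened before its own timestamp.
training_rows = [
    {**t, "num_fraud_transactions": num_fraud_transactions_as_of(index, t["user_id"], t["ts"])}
    for t in transactions
]
```

Note that the row at `ts=20` gets a count of 0 even though that transaction is itself fraudulent. Counting it would leak the label into the feature.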

Online-offline skew

This is a relatively straightforward case. Training data often consists of a large number of rows and is generally created using SQL pipelines. However, features for online serving typically utilize in-app technology, such as Python or JavaScript, and rely on Redis-like key-value stores to fetch the appropriate data. This can lead to subtle differences in the feature definitions, which can cause feature skew.

Another source of skew is feature freshness. If the features are refreshed on a daily basis, for instance, they are fresh in the morning but gradually become stale as the day progresses, so the values served online may not match the fresh values used when the training data was generated.

Feature drift

Feature drift refers to the phenomenon where the distribution of features in the training and serving data diverges over time. This divergence is often a result of real-world data fluctuations due to factors such as seasonality, disasters, or unforeseen events.

For instance, consumer behavior may change during holiday seasons, natural disasters may disrupt normal patterns, or unexpected global events like a pandemic can drastically alter data trends. As these changes accumulate, the model's performance can start to decline because it was trained on, and thus only fits, patterns from its training period.

This means the model may not accurately capture or predict current trends or patterns, leading to less accurate or even incorrect predictions. Therefore, it's crucial to monitor for feature drift and periodically retrain models with fresh data to maintain their performance and accuracy.
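
One common way to quantify drift is the Population Stability Index (PSI), which compares the binned distribution of a feature in training data against the same feature in serving data. The sketch below is an illustrative plain-Python version, not a library implementation. The bin edges, the `eps` floor used for empty bins, and the sample values are all assumptions chosen for the example.

```python
import math


def bin_fractions(values, edges, eps=1e-6):
    """Fraction of values in each bin defined by sorted edges, floored at eps."""
    if not values:
        raise ValueError("cannot bin an empty sample")
    counts = [0] * (len(edges) + 1)
    for v in values:
        # Bin index = number of edges the value has reached or passed.
        idx = sum(1 for e in edges if v >= e)
        counts[idx] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e_frac = bin_fractions(expected, edges, eps)
    a_frac = bin_fractions(actual, edges, eps)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_frac, a_frac))


train = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
serving_drifted = [7, 8, 9, 10, 11, 12, 8, 9, 10, 11]
edges = [4, 7]  # three bins: below 4, from 4 up to 7, and 7 or above

print(psi(train, train, edges))            # identical distributions
print(psi(train, serving_drifted, edges))  # serving data shifted upward
```

Identical distributions give a PSI of zero, and the value grows as the two distributions diverge. The alerting threshold is a judgment call that depends on the feature and the model.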

Feedback loops

A feedback loop in machine learning occurs when the outputs of an ML model can influence customer behavior, which in turn can skew the feature data by altering content distribution.

For example, a recommendation system might suggest certain products or content to users based on their past behavior. As users interact with these recommendations, their behavior changes, which then influences the data that the model is trained on.

This can create a feedback loop where the model's predictions are continually influencing and being influenced by user behavior. This dynamic nature of the data can lead to changes in the distribution of features over time, which can impact the performance of the model if not properly managed.

How to prevent data corruption

Despite these challenges, there are strategies to prevent data corruption. Let’s explore a few of them.

Monitoring the feature distribution

The essence of training a model lies in understanding the feature distribution within the training data. The model then makes predictions based on live data, the distribution of which should ideally mirror that of the training dataset.

By ensuring similarity between these two distributions, we can preemptively tackle many potential problems. This process involves checking for any significant changes in the statistical properties of your features, such as mean, median, variance, etc. Sudden or unexpected shifts could indicate data corruption or other issues with your data pipeline.
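
As a rough illustration of such a check, the sketch below measures how far the live mean of a feature has moved from its training mean, expressed in units of the training standard deviation. It uses only the Python standard library. The function names, the threshold of 3.0, and the sample values are assumptions for the example.

```python
from statistics import mean, stdev


def mean_shift_in_stds(train_values, live_values):
    """Distance between live and training means, in training standard deviations."""
    train_std = stdev(train_values)
    if train_std == 0:
        raise ValueError("training feature has zero variance")
    return abs(mean(live_values) - mean(train_values)) / train_std


def has_shifted(train_values, live_values, threshold=3.0):
    """Flag the feature when the mean moves by more than `threshold` stds."""
    return mean_shift_in_stds(train_values, live_values) > threshold


train = [10, 12, 11, 13, 9, 10, 12, 11]
live_ok = [11, 10, 12, 11]
live_bad = [25, 27, 26, 24]

print(has_shifted(train, live_ok))
print(has_shifted(train, live_bad))
```

The same pattern extends to the median, the variance, or the null rate. A mean-only check is cheap but will miss changes that alter the shape of the distribution without moving its center.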

Logging the features

Logging the features is a straightforward strategy that can preempt a wide range of issues, such as online-offline skew and time travel. This method involves logging the feature values during the inference stage, which can later be joined with the labels to create a training dataset.

Notably, this is the approach employed by major companies like Facebook and Google, given the considerable computational expense of generating new training data each time. While this method is simple to implement and understand, it does have a major drawback. It tends to slow down the iteration cycle significantly, as one would have to deploy a new feature in production and then wait for a week or two before enough data is collected for training.
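
The mechanics can be sketched in a few lines of plain Python. This is a simplified illustration, assuming an in-memory dict stands in for the feature log and that each prediction request carries a hypothetical `request_id` that labels can later be matched against.

```python
# In-memory stand-in for a feature log: request_id -> features as served.
feature_log = {}


def log_features(request_id, features):
    """Record the exact feature values used at inference time."""
    feature_log[request_id] = dict(features)


def build_training_rows(labels):
    """Join logged features with labels that arrive later.

    `labels` is an iterable of (request_id, label) pairs.
    """
    rows = []
    for request_id, label in labels:
        features = feature_log.get(request_id)
        if features is None:
            # No features were logged for this request, so it cannot be used.
            continue
        rows.append({**features, "label": label})
    return rows


# At inference time:
log_features("r1", {"num_txn_7d": 3, "avg_amount_7d": 42.5})
log_features("r2", {"num_txn_7d": 0, "avg_amount_7d": 0.0})

# Later, once labels are known:
rows = build_training_rows([("r1", 1), ("r3", 0)])
```

Because the training rows reuse the values that were actually served, they cannot include future data, and there is no second feature implementation to drift away from the first.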

Not suppressing errors

While authoring the data pipelines that generate the final feature values, we sometimes catch exceptions and silently ignore them. These errors are often the first sign of data corruption or other issues. By surfacing them promptly and failing fast, you can prevent further corruption and ensure the integrity of your features.
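
The difference is easy to see side by side. In this minimal sketch, both function names and the `"N/A"` input are hypothetical. The first function hides a bad upstream value behind a default, while the second fails fast with context.

```python
def parse_amount_suppressed(raw):
    """Anti-pattern: any unparseable value silently becomes 0.0."""
    try:
        return float(raw)
    except (TypeError, ValueError):
        return 0.0


def parse_amount_strict(raw):
    """Fail fast: re-raise with context so the bad input is visible."""
    try:
        return float(raw)
    except (TypeError, ValueError) as exc:
        raise ValueError(f"cannot parse amount from {raw!r}") from exc


# The suppressed version turns a corrupt record into a plausible-looking feature.
print(parse_amount_suppressed("N/A"))

# The strict version stops the pipeline at the first corrupt record.
try:
    parse_amount_strict("N/A")
except ValueError as err:
    print(err)
```

With the suppressed version, a downstream average quietly absorbs the zeros, and the problem only shows up later as degraded model performance.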

Retraining models at regular cadence

Retraining your models regularly with up-to-date data can mitigate issues stemming from data corruption. This is because retraining affords your model the chance to learn from the most recent and accurate data representation. It also enables the detection and rectification of any data corruption that may have occurred since the previous training session.

The most extreme variant of this approach is online training, where models continuously update their parameters as data streams in constantly. While this method can deliver superior performance and is often utilized by large organizations, it's crucial to understand that online training is exceedingly complex to implement and maintain in production. It typically demands a significant amount of engineering effort, and the return on investment may not always justify the effort.

Making resources immutable

Implementing immutability for your data resources implies that once the data pipelines and/or code used to generate the features have been created, they cannot be altered.

This approach can help guard against unintentional or malicious changes to your data that might result in corruption. It also ensures that all users or processes access the same data version, thereby maintaining the consistency and reliability of your features. Users should make it explicitly known to the system when changes that affect downstream consumers are planned, using mechanisms such as incrementing a version number.
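
A minimal sketch of this idea is a registry that refuses to overwrite an existing definition and only accepts changes under a new version. The `FeatureRegistry` class and its methods are hypothetical and do not represent any particular platform's API.

```python
class FeatureRegistry:
    """Stores feature definitions keyed by (name, version); entries never change."""

    def __init__(self):
        self._definitions = {}

    def register(self, name, version, definition):
        key = (name, version)
        if key in self._definitions:
            # Changing an existing definition requires an explicit version bump.
            raise ValueError(f"{name} v{version} already exists; register a new version instead")
        self._definitions[key] = definition

    def get(self, name, version):
        return self._definitions[(name, version)]


registry = FeatureRegistry()
registry.register("txn_age", 1, "now_seconds - txn_ts_seconds")

# Upstream now exports milliseconds: publish version 2 rather than editing version 1.
registry.register("txn_age", 2, "now_seconds - txn_ts_millis / 1000")
```

Models trained against version 1 keep reading version 1 until they are deliberately moved to version 2, so a definition change cannot silently alter their inputs.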

Why monitor with GX?

Great Expectations (GX) is fantastic for monitoring your features. Here are some of the key benefits of using it.

Automated data validation

GX has an automated data validation mechanism. This capability effectively prevents the incorporation of corrupt or inaccurate data into your models, enhancing their overall performance and reliability. It regularly checks for discrepancies in the data and alerts the user if any issues are detected, thereby enabling preemptive actions against potential data corruption.

Facilitates data documentation

GX aids in data documentation by providing a transparent view of your data's behavior over time. It automatically generates Data Docs, offering clear visibility of the data validation results and allowing for a better understanding of the data's evolving characteristics and its overall health.

Efficient data profiling

GX facilitates data profiling by examining and producing statistics on your data, including visual plotting. This can help in the identification of data issues at an early stage, fostering a deeper understanding of the data's structure, and facilitating its successful utilization in machine learning models.

You can also learn new patterns in the data which you might not be aware of, such as the changing demographics of the users using your product.

Enhances test-driven development

GX supports a test-driven approach to data pipeline development. It allows you to assert expectations about your data before it's processed, enhancing the robustness of your data pipelines. It also makes the debugging process easier and more efficient.

Increases trust in results

A very common occurrence in machine learning is building a model that demonstrates strong offline performance but fails to deliver the expected results once deployed in production. Debugging such ML systems can prove exceptionally complex because there are so many potential issues to rule out.

Using GX can significantly bolster your confidence in the feature values. GX helps eliminate numerous hypotheses related to potential data corruption and facilitates quick identification of the actual problems by including rich metadata as part of its Validation Results. Therefore, it not only assists in keeping your ML system in check, but also expedites the debugging process, making your model more reliable and effective in the long run.

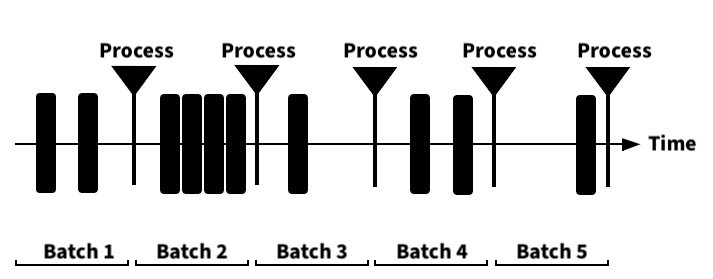

Not just batch data

One noteworthy aspect of GX is its versatility. While it is an excellent tool for validating batch data, it is also adept at handling streaming use cases. This is achieved by partitioning your data into 'mini-batches,' enabling windowed Expectations.

For instance, if you batch your data in one-hour intervals, you can formulate assertions against the data within any time window, maintaining an hour's granularity. This approach offers increased flexibility and accuracy in monitoring your data.
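
The windowing idea can be sketched in plain Python, without the GX API: events are grouped into hourly mini-batches, and a check is then evaluated over any run of consecutive hours. The function names, the `(timestamp, value)` event shape, and the bounds in the final check are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def bucket_by_hour(events):
    """Group (timestamp, value) events into hourly mini-batches."""
    buckets = defaultdict(list)
    for ts, value in events:
        hour_start = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour_start].append(value)
    return buckets


def window_mean(buckets, start, hours):
    """Mean of all values in the `hours` hourly buckets beginning at `start`."""
    values = []
    for i in range(hours):
        values.extend(buckets.get(start + timedelta(hours=i), []))
    if not values:
        raise ValueError("no data in the requested window")
    return sum(values) / len(values)


events = [
    (datetime(2024, 1, 1, 9, 5), 10.0),
    (datetime(2024, 1, 1, 9, 40), 20.0),
    (datetime(2024, 1, 1, 10, 15), 30.0),
    (datetime(2024, 1, 1, 11, 30), 100.0),
]

buckets = bucket_by_hour(events)
start = datetime(2024, 1, 1, 9)

# The same assertion can be evaluated over a two-hour or a three-hour window.
two_hour_ok = 0.0 <= window_mean(buckets, start, 2) <= 50.0
three_hour_ok = 0.0 <= window_mean(buckets, start, 3) <= 50.0
```

Since the buckets are one hour wide, a window can start and end only on hour boundaries, which is the granularity trade-off described above.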

How Fennel uses GX

At Fennel, data correctness sits at the forefront of our design goals. In fact, we have an entire section in our docs dedicated to it. You can learn more about it here.

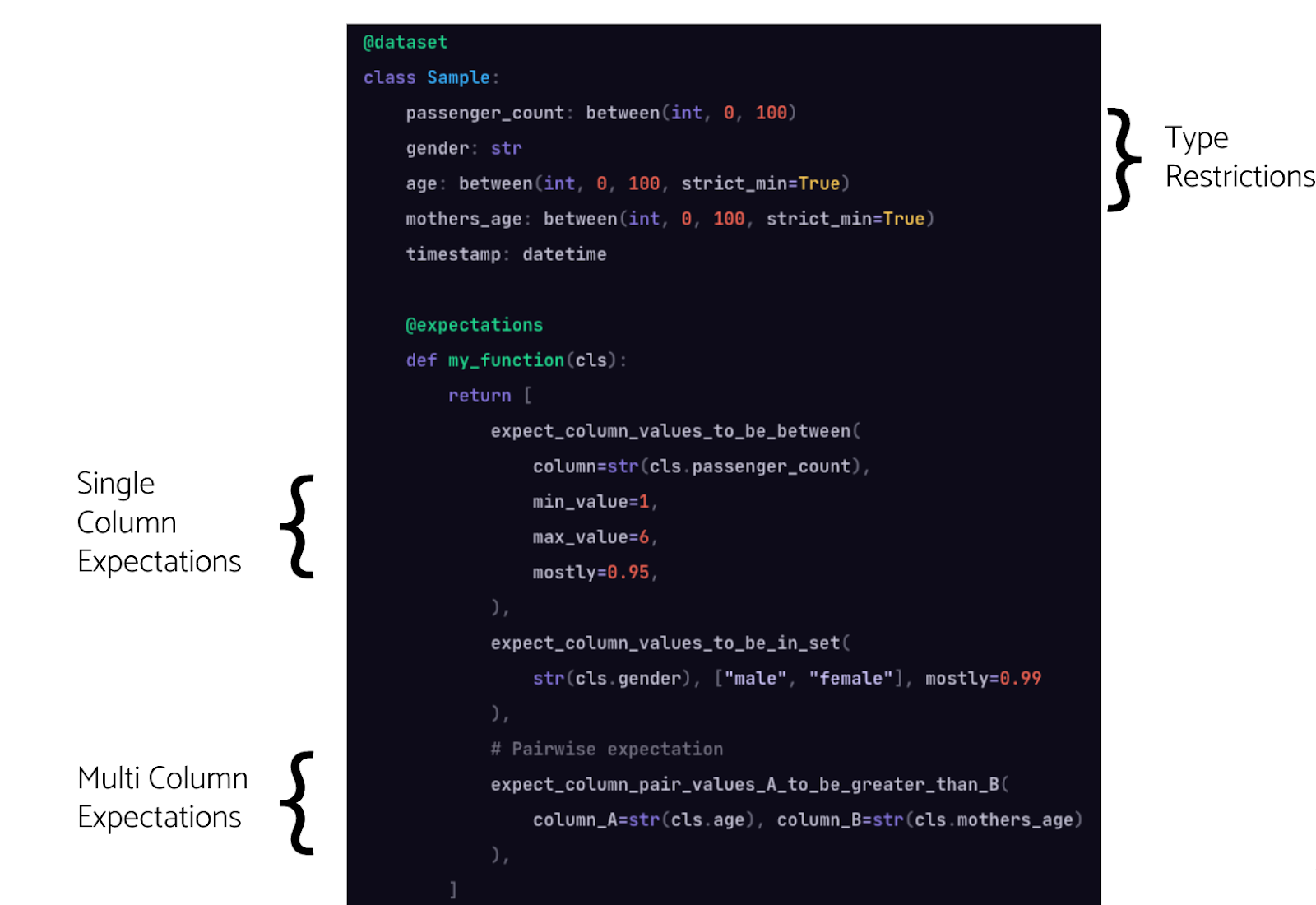

To uphold high standards of data quality, Fennel equips its users with a suite of robust tools. Among these tools, 'Type Restrictions' and 'Expectations' are pivotal.

Fennel has a powerful type system that lets you maintain data integrity by outright rejecting any data that doesn't meet the given types. However, sometimes there are situations when data expectations are more probabilistic in nature.

As an example, you may have a field in a dataset of type Optional[str] that denotes the user’s city (can be None if the user didn't provide their city). While this is nullable, in practice, we expect most people to fill out their city. In other words, we don't want to reject Null values outright, but do want to still track if the fraction of null values is higher than what we expected.

Fennel lets you do this by writing Great Expectations. Once Expectations are specified, Fennel tracks the percentage of rows that fail each Expectation and can alert you when the failure rate is higher than the specified tolerance.
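
The semantics of such a tolerance can be shown with a small plain-Python sketch of the city example. This is not Fennel's or GX's API. The function `check_mostly_not_null` is hypothetical, and its `mostly` argument simply borrows the name Great Expectations uses for the minimum fraction of rows that must pass.

```python
def check_mostly_not_null(values, mostly):
    """Pass when at least `mostly` (a fraction from 0 to 1) of values are non-null."""
    if not values:
        return {"success": True, "failure_rate": 0.0}
    null_count = sum(1 for v in values if v is None)
    failure_rate = null_count / len(values)
    return {"success": (1 - failure_rate) >= mostly, "failure_rate": failure_rate}


# Ten users, two of whom did not provide a city.
cities = ["Mumbai", None, "Delhi", "Pune", "Chennai", None, "Kochi", "Jaipur", "Surat", "Agra"]

strict = check_mostly_not_null(cities, mostly=0.9)    # expect at least 90% filled in
lenient = check_mostly_not_null(cities, mostly=0.75)  # expect at least 75% filled in

print(strict)
print(lenient)
```

No row is rejected in either case. The null values are still accepted, and only the aggregate failure rate is compared against the tolerance.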

Conclusion

As we progress in AI and machine learning, refining our strategies and maintaining data quality remains crucial. It is with high-quality data that we can truly excel in machine learning.

Data quality and integrity are indispensable aspects of feature engineering. Without them, even the most meticulously designed machine learning models can be rendered ineffective. A feature engineering platform plays a pivotal role in managing these challenges, simplifying data handling, and ensuring the reliability of your model's features. By building on GX for data validation, Fennel’s platform provides a robust solution to feature engineering without compromising on data quality.

Aditya Nambiar is a founding member of Fennel AI.