Introduction

The world we live in is constantly becoming more connected, more automated, and more sophisticated, generating huge volumes of data at an incredibly fast pace. Organizations need to use this data in the most effective way possible, leveraging their data as an asset to make timely and data-driven decisions.

The challenge: how can the concept of ‘using data effectively’ be converted into practice?

A key ingredient to realizing the promise of an organization’s data is data quality. Organizations need confidence that their data is fit for the purposes they are putting it to, and they can only achieve this confidence if they have robust data quality checks before and alongside their analytics.

For Lakehouse Platform users, there’s a clear choice to enable effective data use: Lakehouse Engine with GX OSS. Lakehouse Engine pairs the power of GX OSS, Great Expectations’ open source data quality platform, with its own framework for analytics and data flow management. The result is a straightforward way for users to confidently leverage insights from their data.

GX OSS + Lakehouse Engine

GX OSS is an open source data quality platform. Its robust worldwide community of data practitioners demonstrates its effectiveness in helping people realize the most value possible from their data.

The Lakehouse Engine is an open source configuration-driven Spark framework, which serves as a scalable and distributed engine for several lakehouse algorithms and powers data flows and utilities for data products. It uses the concept of an ACON (algorithm configuration), which is a dynamic dictionary in Python that uses a series of keywords to represent different steps in data processing.

The Lakehouse Engine uses Great Expectations for the critical task of ensuring the quality of the data. This integration is made as transparent as possible for users since the environment setup is performed in the background; the focus can be on the creation of Expectations for the data.

Using GX OSS in the Lakehouse Engine, validating the quality of data becomes as simple as adding a few more configurations into the ACON. Those are automatically integrated in the data pipelines as one more step when loading data from different sources.

The Lakehouse Engine uses these keywords to represent different steps of data processing in the ACON:

- Input specifications
- Transform specifications
- Data quality specifications
- Output specifications
- Terminate specifications
- Execution environment

GX OSS’ capabilities for validating data content and structure appear in the ACON’s data quality specifications.

Integration with Great Expectations

This blog post focuses on validating live data while performing data loads, but the Lakehouse Engine also offers a dedicated DQValidator algorithm for validating data at rest according to a tailored schedule. You can use this when the goal is simply to validate data quality and not to move data somewhere else after validation.

As of this post’s publication, DQ features on the Lakehouse Engine powered by GX OSS include:

- Two types of data quality:
  - Validator, which applies the Expectations present in the ACON.
  - Assistant, which uses GX OSS’ Onboarding Assistant to profile and generate Expectations for your data.
- Result sink, which allows data quality results to be written to files or tables.
- Fail on error, which creates a configurable process so that data quality errors can trigger pipeline failure. For example:
  - A max failure can be set so the process fails when more than X% of validations fail.
  - Critical functions can be set so certain Expectations fail your pipeline.
- Row tagging, which tags rows in a table with the results of the data quality checks so people can easily debug which rows have failed certain Expectations. They can then act upon it, or continue to consume and use the data at their own risk.
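To make the fail-on-error behavior more concrete, here is a minimal plain-Python sketch of the decision logic described above. It is an illustration only, not the Lakehouse Engine's implementation: the `ExpectationResult` class, the `should_fail_pipeline` function, and the `max_failure_pct` parameter are hypothetical names, and the precedence between the threshold and the fail-on-error flag is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ExpectationResult:
    """Outcome of one Expectation (hypothetical structure for illustration)."""
    name: str
    success: bool
    critical: bool = False


def should_fail_pipeline(results, max_failure_pct=None, fail_on_error=True):
    """Decide whether a data load should abort, given validation outcomes.

    Assumed rules for this sketch:
    - a failed critical Expectation always aborts the load;
    - if a max failure threshold is set, abort only when the share of
      failed Expectations exceeds it;
    - otherwise any failure aborts the load when fail_on_error is True.
    """
    if not results:
        return False
    if any(r.critical and not r.success for r in results):
        return True
    failed = sum(1 for r in results if not r.success)
    if failed == 0:
        return False
    if max_failure_pct is not None:
        return failed / len(results) > max_failure_pct
    return fail_on_error


# Example: one of four Expectations fails (25%).
example_results = [
    ExpectationResult("not_null_player", True),
    ExpectationResult("match_id_between", True),
    ExpectationResult("maidens_in_set", False),
    ExpectationResult("team_in_set", True, critical=True),
]
abort_at_50_pct = should_fail_pipeline(example_results, max_failure_pct=0.5)
abort_at_20_pct = should_fail_pipeline(example_results, max_failure_pct=0.2)
```

With a 50% threshold the single failure is tolerated, while a 20% threshold would abort the load.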

In the tutorial example section, we provide a more in-depth description of how these features can be used.

Tutorial example

In this example we will use the "ICC mens cricket odi world cup wc 2023 – bowling" dataset, available on Kaggle under the CC0: Public Domain license. We will ingest this data into our system for analysis and run data quality checks on it.

Setup

This example has the following prerequisites:

A Spark-enabled environment, so that the Lakehouse Engine framework can run

Lakehouse Engine framework installed

Lakehouse Engine can be installed as any other Python library:

```bash
pip install lakehouse_engine
```

Creating the ACON

As a first step to reading our data with Lakehouse Engine, we need to create an ACON.

This ACON will contain an input specification, a data quality specification, and an output specification. In this case we will be reading the data, assessing its quality, and writing it to a final table.
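As a rough orientation, the overall shape of this ACON can be sketched as a Python dictionary with three lists, using the `spec_id` and `input_id` values from the sections that follow. The `check_spec_chain` helper is hypothetical and not part of the Lakehouse Engine; it only illustrates how each specification points to the one before it.

```python
# Skeleton of the tutorial's ACON: only the identifiers that link the
# specifications together are shown; the remaining keys are covered in
# the following sections.
acon = {
    "input_specs": [
        {"spec_id": "cricket_world_cup_bronze"},
    ],
    "dq_specs": [
        {
            "spec_id": "cricket_world_cup_data_quality",
            "input_id": "cricket_world_cup_bronze",
        },
    ],
    "output_specs": [
        {
            "spec_id": "cricket_world_cup_silver",
            "input_id": "cricket_world_cup_data_quality",
        },
    ],
}


def check_spec_chain(acon):
    """Return a list of problems where an input_id references an unknown spec_id.

    Hypothetical helper for illustration; it walks the three sections in
    processing order and checks that each input_id was defined earlier.
    """
    known_ids = set()
    problems = []
    for section in ("input_specs", "dq_specs", "output_specs"):
        for spec in acon.get(section, []):
            input_id = spec.get("input_id")
            if input_id is not None and input_id not in known_ids:
                problems.append(f"{section}: unknown input_id '{input_id}'")
            known_ids.add(spec["spec_id"])
    return problems


chain_problems = check_spec_chain(acon)
```

Here the data quality specification reads from the input specification, and the output specification reads from the data quality specification, so the check finds no problems.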

ACONs can be stored and passed to the Lakehouse Engine framework in different ways. In this example we will create the ACON in a Python notebook and then provide it to the Lakehouse Engine framework to run as part of the same notebook.

However, you can also design your code in different ways, such as storing all ACONs in a specific directory and then providing the

Input specification

The Lakehouse Engine supports a multitude of different sources (e.g., kafka, jdbc, ftp, …) and file types. In this case there is only one input, which is a csv file with the scoring information of the players that we will be reading using a batch read type.

Since this is a csv file, we need to specify that it has a header and which delimiter it uses, in this case a comma.

```python
"input_specs": [
    {
        "spec_id": "cricket_world_cup_bronze",
        "read_type": "batch",
        "data_format": "csv",
        "options": {
            "header": True,
            "delimiter": ",",
        },
        "location": "s3://your_bucket_file_location/icc_wc_23_bowl.csv",
    }
],
```

Note: It is also relevant to highlight that each specification block (

Data quality specification

Now that we have defined how to read the data, we want to define how to check its quality. To do this, we use GX OSS inside the data quality specification to validate the data using different Expectations before storing it.

The data quality specification supports several parameters, some of them being purposely abstracted and defaulted in the library to make it easier for users.

The most important parameters are shown in this section and can be split into:

Base configuration parameters

The data quality functions (Expectations) to apply

The first parameters, shown and described below, are base configurations we are applying to control how we want the data quality process to work.

```python
"dq_specs": [
    {
        "spec_id": "cricket_world_cup_data_quality",
        "input_id": "cricket_world_cup_bronze",
        "dq_type": "validator",
        "store_backend": "s3",
        "bucket": "your_bucket",
        "result_sink_location": "s3://your_bucket/dq_result_sink/gx_blog/",
        "result_sink_db_table": "your_database.gx_blog_result_sink",
        "tag_source_data": True,
        "unexpected_rows_pk": ["player", "match_id"],
        "fail_on_error": False,
        # ... continues with critical_functions and dq_functions
```

The data quality parameters are:

dq_type: whether to validate data (

store_backend: the type of backend,

bucket: the bucket name to consider for the

result_sink_location: location on the filesystem (or S3) where the results of your data quality validations should be stored.

result_sink_db_table:

tag_source_data: whether to tag source data when performing data quality checks on your data. When this use is set to

unexpected_rows_pk: the primary key to be used to identify the unexpected rows (the rows that failed the Expectations) in the source.

fail_on_error: whether to fail the pipeline when the data quality validations fail.

After setting the base configuration parameters, we will define two critical validations to verify that all players are from one of the teams participating in the tournament. These functions are called “critical” because if a single one of these Expectations fails, it will abort your data load even if the

Note that the function names (

```python
"critical_functions": [
    {
        "function": "expect_column_values_to_be_in_set",
        "args": {
            "column": "team",
            "value_set": [
                "Sri Lanka", "Netherlands", "Australia", "England",
                "Bangladesh", "New Zealand", "India", "Afghanistan",
                "South Africa", "Pakistan",
            ],
        },
    },
    {
        "function": "expect_column_values_to_be_in_set",
        "args": {
            "column": "opponent",
            "value_set": [
                "Sri Lanka", "Netherlands", "Australia", "England",
                "Bangladesh", "New Zealand", "India", "Afghanistan",
                "South Africa", "Pakistan",
            ],
        },
    },
],
```

Additionally, we will apply the three Expectations below to the data. One of these Expectations has been purposefully set so it gives an error when being processed (the Expectation which checks if the

```python
"dq_functions": [
    {
        "function": "expect_column_values_to_not_be_null",
        "args": {"column": "player"},
    },
    {
        "function": "expect_column_values_to_be_between",
        "args": {"column": "match_id", "min_value": 0, "max_value": 47},
    },
    {
        "function": "expect_column_values_to_be_in_set",
        "args": {"column": "maidens", "value_set": [0, 1]},
    },
],
```

Output specification

In the output specification, we are defining that we want to write the output into a specified location in S3, which is behind the

The output will be written using the delta file format and always overwriting the data previously available on the target table/location. Note that for the purpose of the tutorial we chose to specify both

```python
"output_specs": [
    {
        "spec_id": "cricket_world_cup_silver",
        "input_id": "cricket_world_cup_data_quality",
        "write_type": "overwrite",
        "db_table": "your_database.gx_blog_cricket",
        "location": "s3://your_bucket/rest_of_path/gx_blog_cricket/",
        "data_format": "delta",
    }
],
```

Running the data load with data quality

In order to run the process, import the

```python
from lakehouse_engine.engine import load_data

load_data(acon=acon)
```

The full example is available here.

Run results

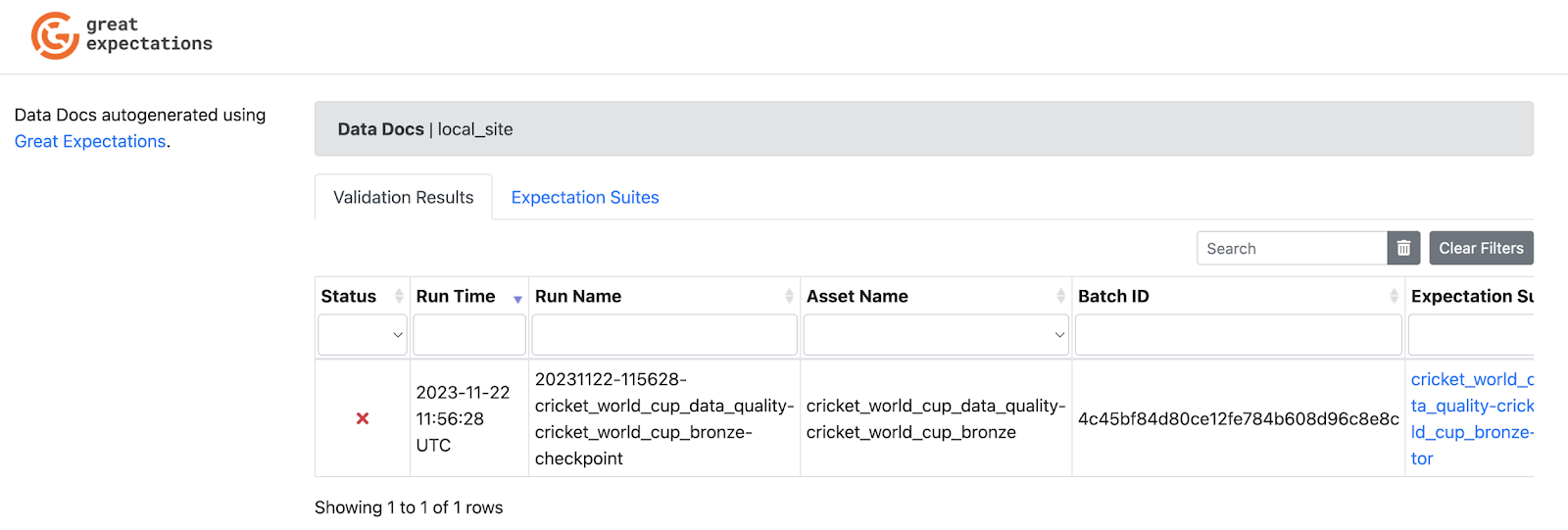

After the data load is performed, the GX data quality checks are created Data Docs, which are now accessible.

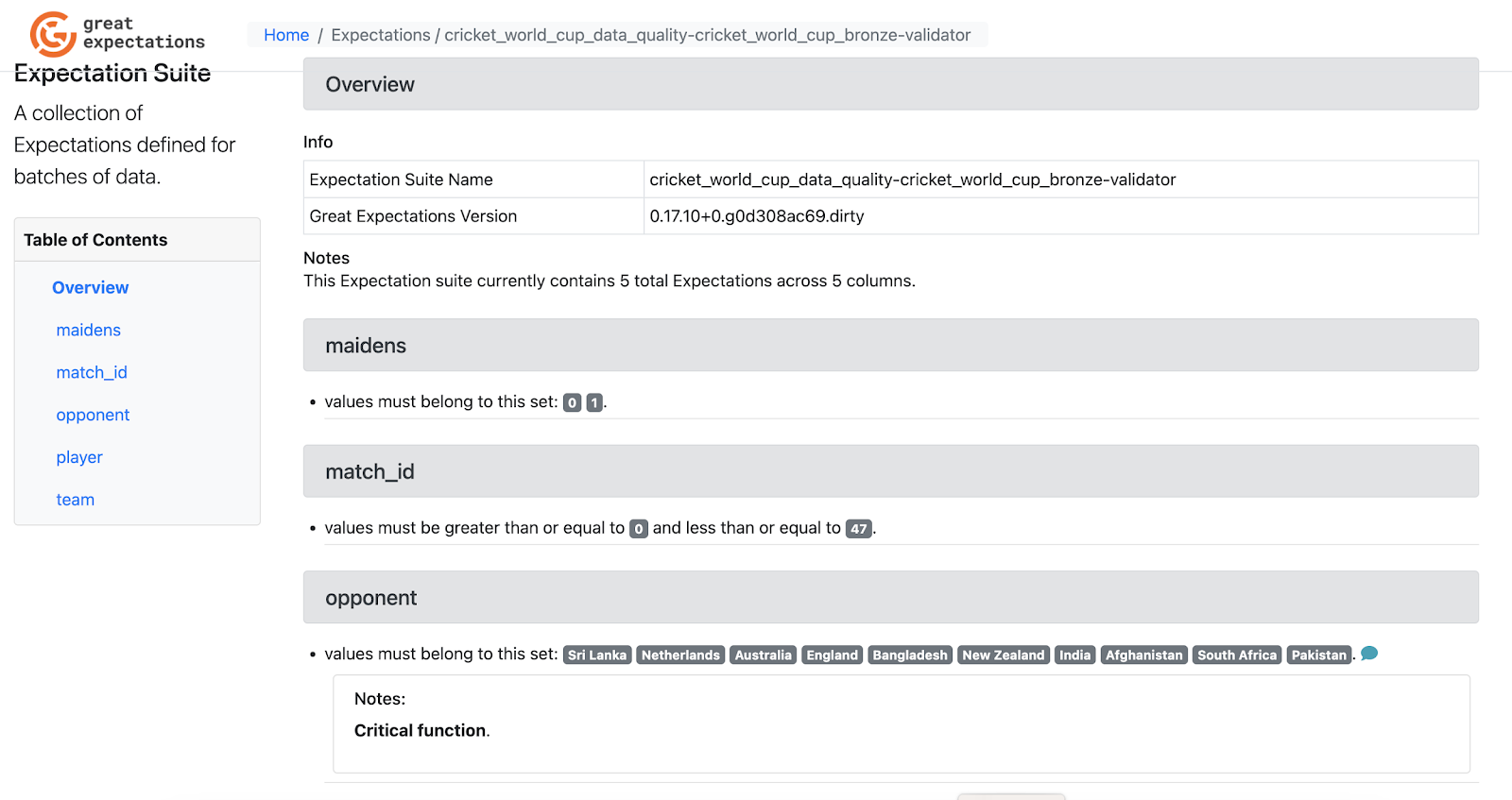

We knew that one of the Expectations we created couldn’t be passed, and as expected you can see the failed data quality validation in this screenshot:

Furthermore, the Lakehouse Engine adds a note “Critical function” to the relevant Expectations, making it easier to identify them when analyzing your results.

Row tagging

Since we have used the row tagging feature (

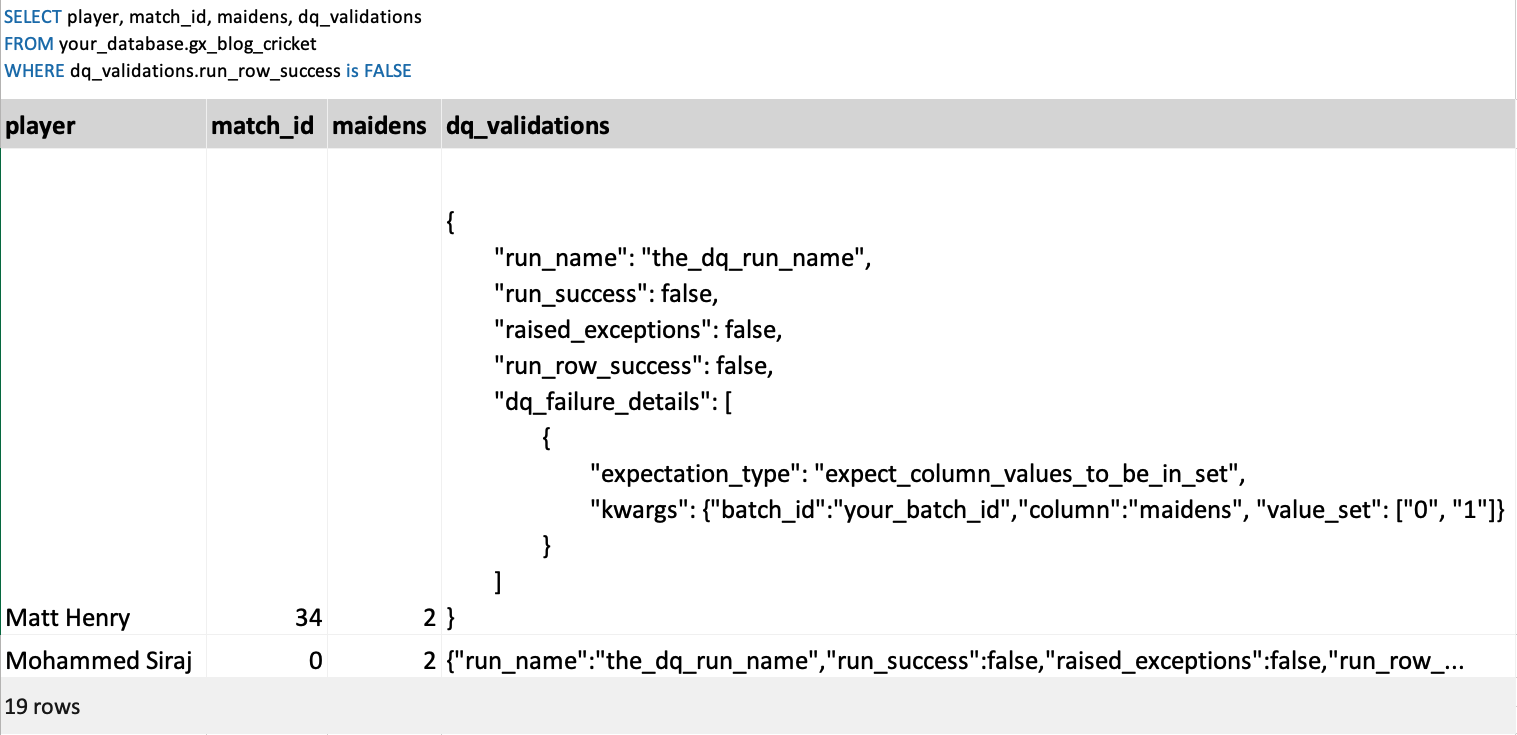

The screenshot below shows the result of a query on the table that our tutorial example just wrote into.

In this query, we are filtering down to the players and match_ids where the row tagging feature applied a

By analyzing these results, we can see that 19 out of 574 rows in the dataset do not pass the Expectation that

With this feature, developers working with this data can debug exactly which rows failed which Expectations and why, so that they can act on that information.

In addition, consumers of the table can easily assess the quality of the data that they are consuming and can decide if they want to keep consuming it at their own risk or if they prefer to stop their analysis (or implement strategies to ignore the problematic rows) until the data quality of the table is improved.
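The idea behind row tagging can be sketched in plain Python. This is not how the Lakehouse Engine or GX OSS implement it: the sample rows are made up (they are not taken from the dataset), the `dq_failures` column name and the `tag_rows` helper are hypothetical, and the predicates only approximate the three Expectations configured earlier.

```python
# Made-up sample rows for illustration; not taken from the Kaggle dataset.
rows = [
    {"player": "Player A", "match_id": 1, "maidens": 0},
    {"player": "Player B", "match_id": 2, "maidens": 2},
    {"player": "Player C", "match_id": 3, "maidens": 1},
]

# Simplified stand-ins for the three Expectations in dq_functions.
checks = {
    "expect_column_values_to_not_be_null(player)":
        lambda row: row["player"] is not None,
    "expect_column_values_to_be_between(match_id, 0, 47)":
        lambda row: 0 <= row["match_id"] <= 47,
    "expect_column_values_to_be_in_set(maidens, [0, 1])":
        lambda row: row["maidens"] in {0, 1},
}


def tag_rows(rows, checks, tag_column="dq_failures"):
    """Return copies of the rows with a list of the checks each row failed."""
    tagged = []
    for row in rows:
        failed = [name for name, predicate in checks.items() if not predicate(row)]
        tagged.append({**row, tag_column: failed})
    return tagged


tagged_rows = tag_rows(rows, checks)

# Filter down to the primary keys (player, match_id) of rows with failures,
# mirroring the query described above.
failing_keys = [
    (row["player"], row["match_id"]) for row in tagged_rows if row["dq_failures"]
]
```

Because the tags live alongside the data, a consumer can filter out the failing rows or inspect them using the same primary key columns configured in `unexpected_rows_pk`.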

How the result sink improves data quality efficiency

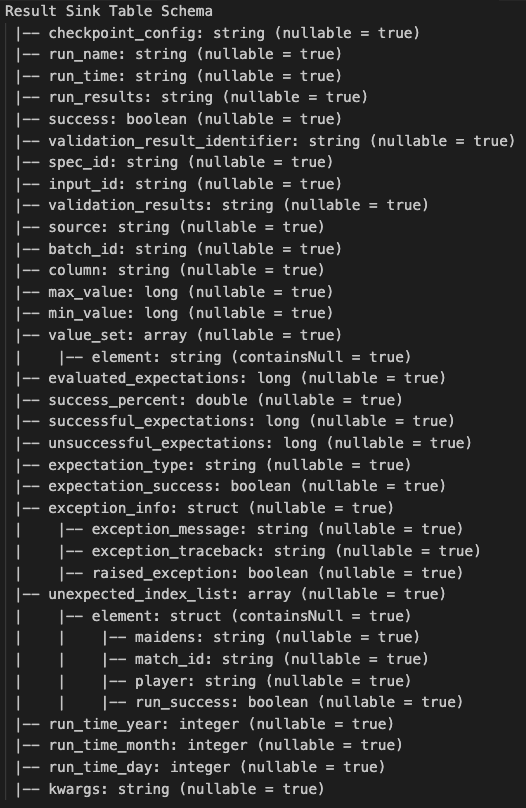

One of the benefits of using the GX OSS integration with the Lakehouse Engine is your ability to use the result sink feature, which you can configure to store the history of your data quality executions in a flat data model (as shown in the sample in the picture below).

The result sink makes it easy for users to bring the results of their GX OSS data quality tests into other analytical and visualization tools. By using the result sink to centralize their data quality results over different time periods and across different organizational assets, users can augment GX’s auto-generated Data Docs and JSON files.

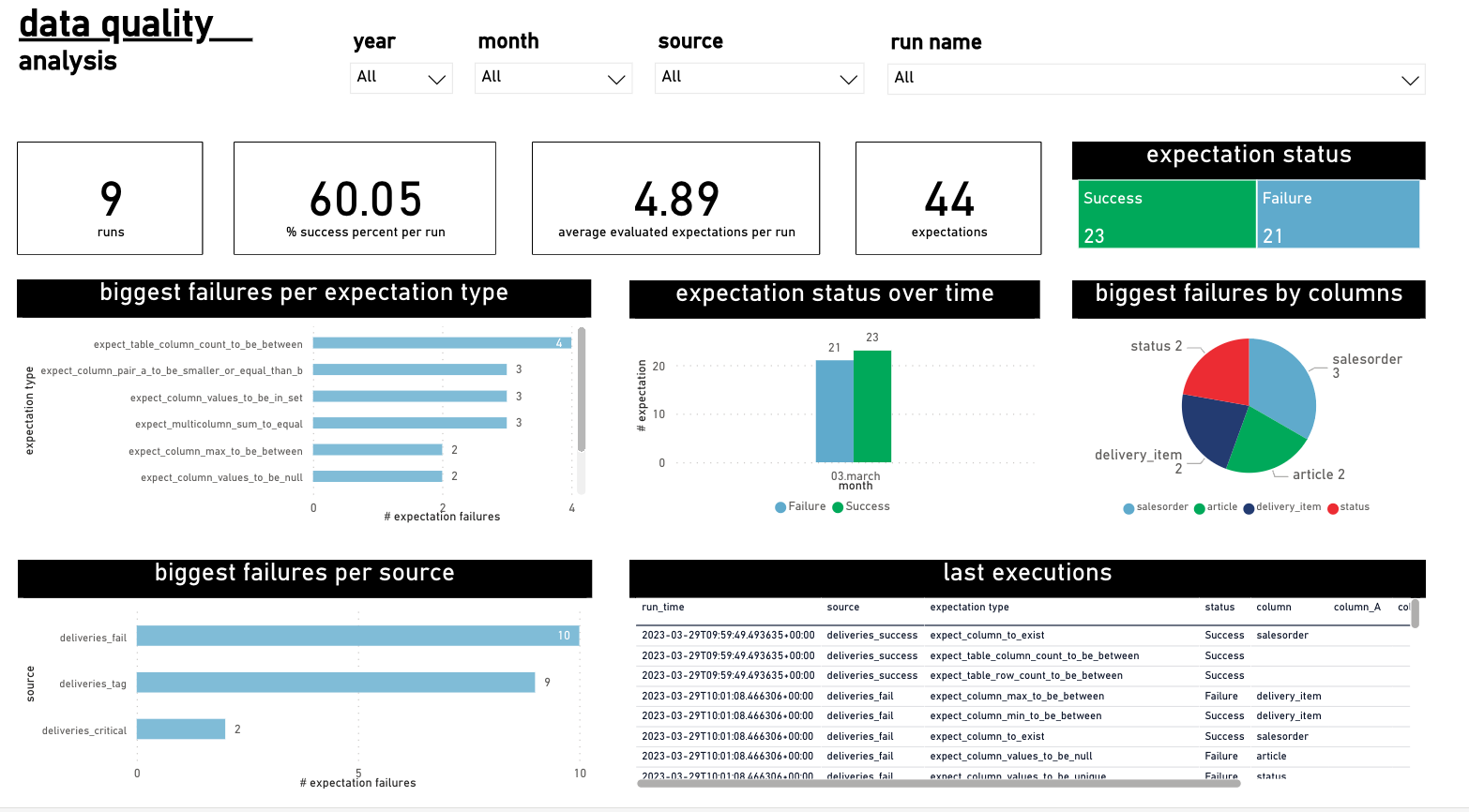

The following dashboard was built using PowerBI as an example of the type of information that can be extracted by analyzing the result sink created by the data quality process. It shows an illustrative representation of the kind of knowledge you can create using the result sink, but is not an exhaustive representation of the data’s analytical possibilities.

With a dashboard, you can answer questions like:

How is the quality in general for my data product?

Which sources of my data product are failing the most?

Is there any pattern in the columns with poor quality?

Note: the screenshot above was not developed on the cricket example dataset used for the tutorial, as that dataset does not offer enough historical information.
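The dashboard questions above boil down to simple aggregations over the flat result sink. The sketch below uses made-up rows and hypothetical column names (`source`, `column`, `expectation`, `success`); the actual result sink schema is not reproduced here, so treat this only as an illustration of the kind of analysis a flat model enables.

```python
from collections import defaultdict

# Made-up result sink rows with hypothetical column names.
result_sink_rows = [
    {"source": "orders", "column": "order_id", "expectation": "not_null", "success": True},
    {"source": "orders", "column": "amount", "expectation": "between", "success": False},
    {"source": "customers", "column": "email", "expectation": "not_null", "success": True},
    {"source": "customers", "column": "country", "expectation": "in_set", "success": True},
]


def failure_rate_by(rows, key):
    """Compute the share of failed validations for each distinct value of `key`."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for row in rows:
        totals[row[key]] += 1
        if not row["success"]:
            failures[row[key]] += 1
    return {value: failures[value] / totals[value] for value in totals}


# Which sources fail the most, and is there a pattern across columns?
failure_rate_per_source = failure_rate_by(result_sink_rows, "source")
failure_rate_per_column = failure_rate_by(result_sink_rows, "column")
```

The same grouping could be done in SQL or in a BI tool directly on the result sink table; the point is that a flat model keeps these questions to a single group-by.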

Final thoughts

In a world characterized by rapid and continuous change, technological advancements evolve at a relentless pace. Managing these constant shifts in underlying technologies while integrating data from diverse sources, curating it, and ensuring data quality for extracting meaningful business insights is a considerable challenge.

The Lakehouse Engine and its integration with GX OSS empower users to confidently ingest and process data in a scalable, distributed Spark environment:

Multiple data teams can benefit from its cutting-edge and battle-tested foundations.

Its centralizing effect prevents silos and reduces technical debt and operating costs arising from redundant development efforts.

It allows data product teams to focus on data-related tasks, with less time and resources spent on developing the same code for different use cases.

It facilitates knowledge-sharing among teams by promoting code reuse, expediting the identification and resolution of common issues.

It decreases the learning curve needed to start using Spark and the other technologies that the Lakehouse Engine abstracts.

It streamlines repetitive tasks, ultimately enhancing efficiency.

Using the Lakehouse Engine and GX OSS to ensure data quality and manage your data processes means you can amplify the value of your data-driven decision-making.

More information about the Lakehouse Engine and GX OSS can be found here:

The Lakehouse Engine is available on GitHub and PyPI, with documentation at adidas.github.io.

GX OSS is available on GitHub and PyPI, with documentation at docs.greatexpectations.io.

You can also join our community spaces:

We hope you enjoyed learning more about the Lakehouse Engine framework, and we sincerely invite you to start using it and even become one of our contributors! 🚀

About the author

José Correia is a senior data engineer, one of the creators of Lakehouse Engine, and a GX Champion.

José extends his additional thanks to Bruno Ferreira, Carlos Costa, David Carvalho, Eduarda Costa, Filipe Miranda, Francisca Vale Lima, Gabriel Correa, Guaracy Dias, Luís Sá, Nuno Silva, Pedro Rocha, Sérgio Silva, and Thiago Vieira for their contributions, as well as to the rest of the incredible Lakehouse Foundations Engineering Team!