Let’s be honest: the concept of a “data warehouse” isn’t anything particularly novel. Ingesting data from different sources across an organization as well as third parties, then transforming and storing it in a single location seems like the most logical way to make it easily accessible by users such as analysts and data scientists. How else would you be able to create data insights that are based on data that is generated from applications in different parts of the organization, say, for example, understanding the effectiveness of an advertising campaign by combining the marketing and sales data? Data warehousing, along with the often associated “ETL” (extract, transform, load) pattern, has long established itself as the standard approach to solving these types of problems.

However, with the stellar rise of data warehouse related tools in the data engineering ecosystem, such as Snowflake, Amazon Redshift, and dbt (data build tool), data warehousing has once again become a term that’s on top of everyone’s minds. And even though we often hear from data engineering teams that data quality problems cause severe downstream issues in analytical applications, cost the organization time and money, and eventually erode the stakeholders’ trust in the data, the issue of data quality in a data warehouse environment is far from a solved problem. While some tools in the data warehousing ecosystem come with built-in data testing capabilities, they often only focus on a particular step in a typical data warehouse workflow. On the other hand, data validation with Great Expectations can be implemented at several critical steps across the entire data warehouse workflow in order to establish guarantees for end-to-end data quality. Let’s take a closer look at Great Expectations in a data warehouse environment in this blog post!

What do we mean by “data warehouse”?

While there is no one hard and fast definition of what exactly a data warehouse should look like, we commonly think of it as a large, massively scalable data storage system, which stores data from various sources. But the term “data warehouse” usually doesn’t just denote the storage location, but also the workflows that are associated with it. The term “ETL” (extract, transform, load) is often used to describe a common workflow for integrating data into a data warehouse:

Extract: Data is gathered from various sources, such as operational systems and application databases across an organization, third party vendors, and often individual stakeholders. The data formats tend to be very heterogeneous - we often hear from users who integrate data from several different relational databases, CSV and other file-based formats on FTP servers or cloud storage buckets, manual uploads of spreadsheets, and third party APIs. Typically, a data warehousing workflow runs regular processes to schedule the periodical extraction of all these different data sources into a staging environment.

Transform: Typically, data from a number of disparate sources is not directly usable by the end users of a data warehouse, which means that it is necessary to transform the data in order to make it usable. In the transformation step, the extracted data are usually cleansed, filtered, and joined, in order to provide a clean and usable dataset to the stakeholders.

Load: Finally, in the “load” stage, the data is loaded into the data warehouse, i.e. the storage platform, where it can be accessed by users.

Since there is often no distinction between the execution environment for the “transform” layer and the actual storage, we often also hear the term “ELT” (extract, load, transform) to refer to a process of transforming the data in the same place where it is consumed. And for all of us working with data, we know that no data pipeline ever really matches any of these patterns exactly: Every data pipeline is a unique variation and combination of moving data around, storing, and transforming it.

How does Great Expectations fit into a data warehouse environment?

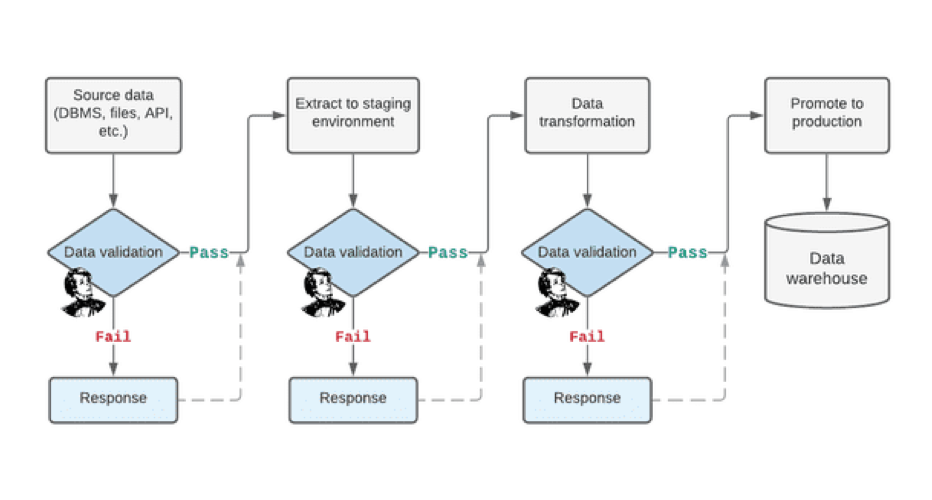

Now, assuming you have a data warehousing workflow in your organization, how does data validation with Great Expectations fit into this environment? We’ll just give you the answer straight away: Any time there is a handoff of data between systems or teams, you should validate the data in order to ensure that it is in the expected state. An example workflow with data validation steps is shown in the diagram below.

Data extraction stage

What does this mean concretely? Well, let’s start at the beginning of the pipeline: The system ingests data from a variety of sources and from different teams and stakeholders. Running data validation on the source data before even ingesting it ensures that there are no issues with it, and can give the data producers immediate feedback if there are in fact any inconsistencies.

For example, if we integrate data from an application database, we can create Expectations that test that the data matches the expected schema, value sets and ranges of numerical values are in line with what we expect, and there are no unexpected NULL values. One of our users told us about a situation where an upstream application team introduced a new dummy value “999” into a column that was used to identify the number of months an employee had been with the organization. Clearly, 999 months (83 years) is not an acceptable value for this data point, but without any data validation, it had easily slipped into the downstream analyses and caused skewed results that were only noticed after a while. By defining Expectations of that data, the ingesting team can establish a “data contract” with the upstream data producers that explicitly specifies the shape of the data and prevents such errors.

Another frequent cause of data inconsistencies at the “extract” stage is incomplete or incorrect ingestion of data. This can be caused by issues such as failures in the code (for example when encountering an incorrect value - hands up if you’ve had a data pipeline break because of special characters in text fields…), or interrupted connections. Data validation with Great Expectations can be implemented in order to test that the extracted data in the staging environment matches the source data and now rows where lost. This can be accomplished by using “Evaluation Parameters” which reference the observed results of previous validation runs to be used in another Expectation.

One more factor to consider when adding data validation steps in a pipeline is the desired response to a failed validation. In the case of validating source data before and after the ingestion stage, we may consider halting the pipeline execution if the data are known to be inconsistent. Especially in environments where you pay for compute, not even continuing to transform and load broken data does not only save time, but can also avoid unnecessary cost.

Data transformation stage

Once we know that we’re dealing with correct - and correctly ingested - source data, we move on to the transformation stage. It is pretty clear that applying various transformations such as filtering and joining data is prone to errors, which may arise from incorrect code, or data that does no longer match what the transformation code expects. At this stage, it is absolutely crucial to test that the transformations were done correctly and that the data is in the correct state after applying them. This can be done in one of two ways:

Run data validation after each transformation step

Run data validation after the entire transformation stage

The former approach is mostly advantageous when paired with pipeline flow control, i.e. when a failed validation result can stop the pipeline execution, which you will want to happen right after the incorrect transformation. The latter approach is more focused on providing a retrospective view of the data. And of course, there can be a combination of the two. For example, it might be beneficial to run data validation right after a transformation in order to prevent a spurious run of a computationally heavy transformation, but then also run validation of all outputs at the end to create a summary data quality report.

Data loading stage

In the same way as testing data after ingesting it from sources, the data loading stage can be another type of “handoff” between systems, which benefits from some form of validation. However, I would claim that the need for and type of validation depends on the type of “loading” that is done here: If the data are moved between different backends, the risk of a failure might be higher than if the loading stage is simply a “promoting to production” type pattern such as renaming a table from “staging” to “prod” or moving it to a different schema.

However, providing documentation about the data quality in the form of Data Docs which are based on Expectation Suites that are run against the final output of the loading step can be extremely valuable for data consumers who want to ensure that the data they’re using is accurate. While oftentimes it is the data engineering teams that own the pipelines that are responsible for adding data tests, we also find that Great Expectations can (and should) be used by data consumers in order to specify “acceptance criteria” for the data they pull from a data warehouse. Consider the definition of data quality in this blog post which really resonates with me: “Data quality is usually defined as a metric on how well a product meets user requirements. Different users might have different requirements for a product so the implementation depends on the user’s perspective, and it is important to identify these needs.”

With respect to data validation, this means that it is possible to not just have “one Expectation Suite to rule them all”, but it is possible to maintain different Expectation Suites for different stakeholders, with different thresholds for failure, and different parameters. For example, analytical application A might require data that is 100% complete and has absolutely no NULL values, whereas application B might be able to accept a small number of incomplete records (say, 10%), which can be set by using a “mostly” parameter in an Expectation definition:

How do I get there with Great Expectations?

In this last section, we’ll take a little step back to look at the inner workings of Great Expectations and discuss how you actually get to the “data validation” step in the above diagram. If you’re completely new to Great Expectations, check out the “Getting started tutorial” first to walk through a basic workflow of setting up a local Great Expectations deployment and create a basic Expectation Suite with some simple Expectations.

A Great Expectations deployment is based around a Data Context, which is basically the project configuration that defines all relevant aspects of Great Expectations:

What data do we want to connect to?

How do we validate the data?

Where do we store assets and metadata such as the actual Expectation Suites, metrics, and validation results, Checkpoints, etc.?

The below diagram shows the high-level architecture of a typical Great Expectations deployment.

Furthermore, we tend to think of a typical production workflow with Great Expectations as a multi-step process: Context configuration, expectation creation, and data validation:

Context configuration (done once per “project”)

Create a Data Context: As mentioned above, a Data Context is the basic configuration of a Great Expectations project. It is usually defined by a

Configure Datasources: This simply means that you tell Great Expectations where to find the data that is used as a reference for building Expectation Suites, and the data that you want to validate with those Expectation Suites. A Datasource configuration will be stored in the

Configure stores and Data Docs (optional): Stores for Expectations, validation results, and metrics are simply pointers to the places where the respective assets are - you guessed it - stored. Similarly, Data Docs (HTML pages) can be configured to be hosted anywhere. By default, Great Expectations stores all of these in the local file system in the root directory of your Data Context.

Expectation creation and Checkpoint creation (done once, iterate as needed)

Create Expectation Suites: This can either be done manually by stating each Expectation individually as a Python method, or by using the automated Profiler that ships with Great Expectations and provides a “scaffold” for Expectations based on what it sees in the data, or - most likely - a combination of both.

Configure Checkpoints: A Checkpoint in Great Expectation is basically a “bundle” of an Expectation Suite and one or multiple data assets that you want to validate. By creating a new Checkpoint, you can easily trigger validation of a data asset.

Data validation (done periodically, e.g. at every pipeline run)

Run validation with a Checkpoint: This is as simple as using the

Respond to the validation results: This final step can take many shapes. Possible responses could be alerts and notifications, halting the pipeline execution, logging, or a simple manual review of the generated Data Docs in order to understand the state of the data. Some of our users have also started tapping into the stored validation results in order to use the results in data quality dashboards, see this Discuss article for an overview.

Steps 1 and 2 are usually only done at the setup stage and initial configuration of Expectations and are generally not scheduled as part of a pipeline. Instead, we recommend treating these configurations and Expectation Suites as configuration assets that are checked into version control. On the other hand, step 3 should be scheduled as part of the regular pipeline runs, as described in the previous sections. Of course, as requirements and data change over time and new data assets are added, it becomes necessary to iterate and refine the existing Expectation Suites and Checkpoints, or add new ones. And this is it - a standard workflow with Great Expectations where you create Expectations and use them to repeatedly validate incoming and transformed data.

We hope this blog post gave you a good idea of how Great Expectations can be deployed in a data warehouse context. We always love to hear from our users who implement Great Expectations for interesting use cases, data warehouse or other - Join the Great Expectations Slack community and say hi!