The AWS Glue Data Catalog is a highly scalable metadata store that provides a uniform repository for keeping track of your data. Glue and Spark jobs, for example, can use it as an external metastore to consume data stored in data lakes through tables organized into databases. Instead of reading data directly from Amazon S3, you can query cataloged tables and enforce row- and/or column-level permissions on them through AWS Lake Formation.

Until recently, Great Expectations didn’t provide a way to read data through the AWS Glue Data Catalog. If you had a data lake in AWS, you had to use the S3 data connector and grant S3 permissions to the IAM role used to validate data, making Boto3 API calls to get temporary credentials from AWS Lake Formation to access the data.

In partnership with Alex Sherstinsky from the GX team, I have developed a new data connector: the AWS Glue Catalog Data Connector. It allows you to connect to AWS Glue Data Catalog, define the Expectations you want, and validate your tables using Spark.

This way, you can use our beloved GX data quality framework while enforcing row- and column-level permissions through AWS Lake Formation access control.

I’m Lucas Casagrande, Machine Learning Engineer at AWS ProServe LATAM. In this guide, I’ll walk you through the step-by-step process of setting up this new data connector to connect GX with the AWS Glue Data Catalog.

Prerequisites

In order to use the new AWS Glue Catalog Data Connector, you must have an environment with Spark configured with AWS Glue Data Catalog as the metastore. There are several options for this setup, including:

You’re free to choose any of these options: this step-by-step process will work the same. For the sake of simplicity, I have provided a code sample with Terraform to deploy the infrastructure and set up the first environment option (AWS Glue Studio Notebooks). You do not need to use this code sample, but the walkthrough below assumes that you have the same setup in your AWS account.

For the solution deployment, make sure you have the following:

An AWS account to deploy the required AWS resources.

A machine with the following tools installed and configured:

AWS Command Line Interface (AWS CLI)

Terraform

Git

0. Solution Deployment

If you choose not to use the provided code sample, you can skip this section. Otherwise, follow these steps to deploy the solution into your AWS account.

Step 1. Open your device’s command line and clone the Git repository with the code sample:

```shell
$ git clone https://github.com/great-expectations/gx_tutorials.git
```

Step 2. In the command line, navigate to the directory where the repository was cloned and go to the infra directory.

```shell
$ cd gx_tutorials/gx_glue_catalog_tutorial/infra
```

Take a look at the main.tf and variables.tf files to get an idea of what is being deployed.

Step 3. In the command line, use the AWS CLI to set up the credentials for logging in to your AWS account. Alternatively, you can use environment variables, as in the following example:

```shell
$ export AWS_ACCESS_KEY_ID=***********
$ export AWS_SECRET_ACCESS_KEY=***********
$ export AWS_DEFAULT_REGION=us-east-1
```

Step 4. Validate that you are authenticated by running the following command:

```shell
$ aws sts get-caller-identity
```

This command will output the UserId and Account you are authenticated with.

Step 5. Once you validate your credentials, run the following to deploy the solution into your account:

```shell
$ terraform init && terraform apply -auto-approve
```

After you execute this command, Terraform will do the following in your AWS account:

Create an Amazon S3 bucket to store data and the GX files.

Copy the NYC-TLC trip data from a public S3 bucket and upload it into the bucket created in the first step.

Create an IAM policy and role to be assumed by the Glue Notebook.

Create a database and a single table in Glue Data Catalog.

Add three partitions (the default) to the table.

Step 6. Log in to the AWS Console using the same account used for the solution deployment, navigate to S3 and check the buckets. You should have a bucket named great-expectations-glue-demo-<AWS_ACCOUNT_ID>-<AWS_REGION>.

Step 7. In the AWS Console, navigate to Glue and, under Data Catalog, open Databases. You should have a database named db_ge_with_glue_demo.

Step 8. Open the database and check its tables: you should have a table named tb_nyc_trip_data. Open the table and check its partitions; you should find three partitions, named 2022-01, 2022-02, and 2022-03.

The code sample will set up three partitions (by default) using the NYC-TLC Trip Data. If you want to change the number of partitions, make the appropriate changes in the variables.tf file and run the command in step 5 again to update the configuration.

Step 9. In the Glue console, open Jobs in AWS Glue Studio, select Jupyter Notebook, and then select “Upload and edit an existing notebook”. Select Choose file, upload GE-Demo-GlueCatalog-QuickStart.ipynb from the repository’s notebook directory, and create the job.

Step 10. In the Notebook setup, enter a name of your choice and, for IAM Role, select the role GE-Glue-Demo-Role. This role has been deployed for you as part of the solution deployment. Select Spark as the Kernel and start the notebook.

Once you have completed all these steps, your environment is ready to start working with Great Expectations and AWS Glue Data Catalog.

1. Set up AWS Glue Interactive Session

Before you start coding, note that there are several options for configuring an AWS Glue interactive session. The following code cell uses some of them; refer to the AWS Glue interactive sessions documentation for the complete list of available options.

Run the next code cell to set up the interactive session:

```
%additional_python_modules great_expectations>=0.15.32
%number_of_workers 2
%glue_version 3.0
```

Once you run the code, the interactive session will be configured to use Glue 3.0, allocate 2 DPUs, and install the Great Expectations package. Feel free to add or modify this setup as you like.

The interactive session will not be created until we execute some code. Let’s start the session by running the following code cell to import some standard Glue libraries:

```python
import sys
from awsglue.transforms import *
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.job import Job

sc = SparkContext.getOrCreate()
glueContext = GlueContext(sc)
spark = glueContext.spark_session
job = Job(glueContext)
```

The interactive session is started once you run the code above. Check the output to validate that the session has been created.

2. Create a Data Context

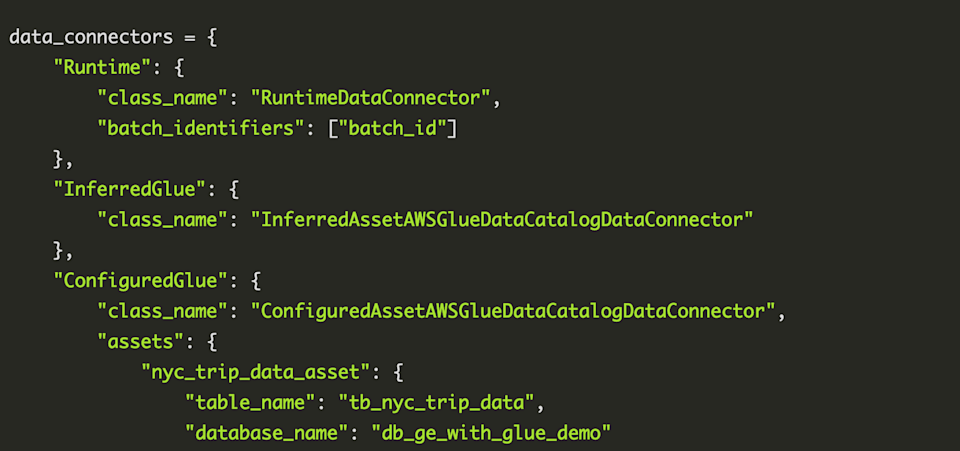

For simplicity, we will create an in-memory GX Data Context that uses the Amazon S3 bucket created by Terraform as its store backend. The following example shows a Data Context configuration with a Spark Datasource that uses the new AWS Glue Catalog Data Connector. Refer to the following documentation for details.

Replace the AWS_ACCOUNT_ID placeholder in the bucket name with your AWS account ID (and us-east-1 with your region, if you deployed elsewhere), then run the following cell to create the Data Context:

```python
from great_expectations.data_context.types.base import (
    DataContextConfig,
    DatasourceConfig,
    S3StoreBackendDefaults,
)
from great_expectations.data_context import BaseDataContext

data_connectors = {
    "Runtime": {
        "class_name": "RuntimeDataConnector",
        "batch_identifiers": ["batch_id"]
    },
    # Exposes every table in the Glue Data Catalog as "<database>.<table>"
    "InferredGlue": {
        "class_name": "InferredAssetAWSGlueDataCatalogDataConnector"
    },
    # Exposes only the tables listed under "assets"
    "ConfiguredGlue": {
        "class_name": "ConfiguredAssetAWSGlueDataCatalogDataConnector",
        "assets": {
            "nyc_trip_data_asset": {
                "table_name": "tb_nyc_trip_data",
                "database_name": "db_ge_with_glue_demo"
            }
        }
    }
}

glue_data_source = DatasourceConfig(
    class_name="Datasource",
    execution_engine={
        "class_name": "SparkDFExecutionEngine",
        "force_reuse_spark_context": True,  # reuse the Glue session's SparkContext
    },
    data_connectors=data_connectors
)

metastore_backed = S3StoreBackendDefaults(default_bucket_name="great-expectations-glue-demo-<AWS_ACCOUNT_ID>-us-east-1")

data_context_config = DataContextConfig(
    datasources={"GlueDataSource": glue_data_source},
    store_backend_defaults=metastore_backed,
)

context = BaseDataContext(project_config=data_context_config)
```
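The bucket name in the cell above hardcodes us-east-1. If you deployed to another region, a small helper like the following can build the name from the naming convention shown in Step 6, so only the account ID and region change. The function `demo_bucket_name` is hypothetical (not part of GX) and assumes you kept the Terraform defaults.

```python
def demo_bucket_name(account_id: str, region: str) -> str:
    """Build the bucket name created by the tutorial's Terraform code.

    Assumes the convention great-expectations-glue-demo-<ACCOUNT_ID>-<REGION>
    from the deployment steps; adjust if you changed the Terraform variables.
    """
    # AWS account IDs are 12-digit strings
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError(f"expected a 12-digit AWS account ID, got {account_id!r}")
    return f"great-expectations-glue-demo-{account_id}-{region}"


# Inside the Glue session you could look the values up instead of typing them:
#   import boto3
#   account_id = boto3.client("sts").get_caller_identity()["Account"]
#   region = boto3.session.Session().region_name
#   S3StoreBackendDefaults(default_bucket_name=demo_bucket_name(account_id, region))
print(demo_bucket_name("123456789012", "us-east-1"))
```

The account ID 123456789012 is a placeholder; looking it up through STS avoids copy-paste mistakes in the bucket name.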

Now that you have a Data Context, you can check the available data assets that the AWS Glue Data Connector recognizes in the Glue Catalog. Run the following code to get the list of available data assets:

```python
from pprint import pprint

pprint(context.get_available_data_asset_names())
```

If you have deployed the provided Terraform code into your AWS account, you’ll see that the InferredGlue connector returned the table db_ge_with_glue_demo.tb_nyc_trip_data. This table was created as part of the solution deployment. If you have more tables in the Glue Catalog, this connector will output all of them.
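When the catalog holds many tables, it can help to narrow the inferred list to one database. The sketch below assumes two things about the output above: that it is a nested dict of the form `{datasource: {connector: [asset names]}}`, and that inferred names follow the `<database>.<table>` pattern seen for `db_ge_with_glue_demo.tb_nyc_trip_data`. The helper name and the `other_db.some_table` entry are made up for illustration.

```python
from typing import Dict, List


def glue_tables_in_database(
    available: Dict[str, Dict[str, List[str]]],
    database: str,
    datasource: str = "GlueDataSource",
    connector: str = "InferredGlue",
) -> List[str]:
    """Return the inferred asset names that belong to one Glue database."""
    names = available.get(datasource, {}).get(connector, [])
    prefix = f"{database}."  # inferred assets are named "<database>.<table>"
    return sorted(name for name in names if name.startswith(prefix))


# Hand-written sample shaped like get_available_data_asset_names() output.
sample = {
    "GlueDataSource": {
        "InferredGlue": ["db_ge_with_glue_demo.tb_nyc_trip_data", "other_db.some_table"],
        "ConfiguredGlue": ["nyc_trip_data_asset"],
        "Runtime": [],
    }
}
print(glue_tables_in_database(sample, "db_ge_with_glue_demo"))
```

In the notebook you would pass the real output instead: `glue_tables_in_database(context.get_available_data_asset_names(), "db_ge_with_glue_demo")`.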

For fine-grained control over which tables are available, use the ConfiguredGlue example. The configured connector requires you to define each table (and its partitions) that you want to validate.
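If you list more than a couple of tables, generating the ConfiguredGlue entry from a list keeps the config tidy. This is a minimal sketch: `configured_glue_connector` is a hypothetical helper, and it sets only the `table_name` and `database_name` keys used in the Data Context example above (check the connector documentation for how partitions are declared in your GX version).

```python
from typing import Dict, Iterable, Tuple


def configured_glue_connector(tables: Iterable[Tuple[str, str, str]]) -> Dict:
    """Build a ConfiguredAssetAWSGlueDataCatalogDataConnector config dict.

    `tables` yields (asset_name, database_name, table_name) triples.
    """
    assets = {}
    for asset_name, database_name, table_name in tables:
        # Asset names must be unique within a connector
        if asset_name in assets:
            raise ValueError(f"duplicate asset name: {asset_name}")
        assets[asset_name] = {"table_name": table_name, "database_name": database_name}
    return {
        "class_name": "ConfiguredAssetAWSGlueDataCatalogDataConnector",
        "assets": assets,
    }


connector = configured_glue_connector(
    [("nyc_trip_data_asset", "db_ge_with_glue_demo", "tb_nyc_trip_data")]
)
print(connector)
```

You could then set `data_connectors["ConfiguredGlue"] = configured_glue_connector([...])` before building the `DatasourceConfig`.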

Be aware that the connector does not check whether the tables you define exist or whether you have permission to access them. If you’re using AWS Lake Formation, make sure the Glue IAM role has been granted access to those tables.
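If Lake Formation governs the table, one way to grant the notebook role read access is the Lake Formation `GrantPermissions` API through boto3. The sketch below only builds the request arguments; it assumes the role ARN follows the plain `arn:aws:iam::<ACCOUNT_ID>:role/GE-Glue-Demo-Role` form (no IAM path) and that `SELECT` is the permission you need. The helper name and account ID are hypothetical, and the call must run with Lake Formation administrator credentials.

```python
from typing import Dict, List, Optional


def lake_formation_select_grant(
    role_arn: str,
    database: str,
    table: str,
    columns: Optional[List[str]] = None,
) -> Dict:
    """Build keyword arguments for lakeformation.grant_permissions().

    With `columns`, the grant is column-level (TableWithColumns);
    otherwise it covers the whole table.
    """
    if columns:
        resource = {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        }
    else:
        resource = {"Table": {"DatabaseName": database, "Name": table}}
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": resource,
        "Permissions": ["SELECT"],
    }


kwargs = lake_formation_select_grant(
    "arn:aws:iam::123456789012:role/GE-Glue-Demo-Role",  # hypothetical account ID
    "db_ge_with_glue_demo",
    "tb_nyc_trip_data",
    columns=["vendorid", "passenger_count"],
)
# As a Lake Formation administrator:
#   import boto3
#   boto3.client("lakeformation").grant_permissions(**kwargs)
print(kwargs["Resource"])
```

A column-level grant like this one means any Expectation on a column outside the list would fail to read it, which is exactly the access control Lake Formation is meant to enforce.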

3. Create Expectations

Once you have the Data Context set up, you can start to author the Expectations for your tables. Run the following code cell to create an Expectation Suite and a Validator that you can use to create the Expectations interactively and add them to your suite.

```python
from great_expectations.core.batch import BatchRequest

expectation_suite_name = "demo.taxi_trip.warning"

# Create Expectation Suite
suite = context.create_expectation_suite(
    expectation_suite_name=expectation_suite_name,
    overwrite_existing=True,
)

# Batch Request: load only the year=2022, month=03 partition
batch_request = BatchRequest(
    datasource_name="GlueDataSource",
    data_connector_name="InferredGlue",
    data_asset_name="db_ge_with_glue_demo.tb_nyc_trip_data",
    data_connector_query={
        "batch_filter_parameters": {
            "year": "2022",
            "month": "03"
        }
    }
)

# Validator
validator = context.get_validator(
    batch_request=batch_request,
    expectation_suite_name=expectation_suite_name,
)

# Print the schema of the first rows; head() returns a pandas DataFrame,
# and DataFrame.info() prints its summary directly (it returns None)
df = validator.head(n_rows=5, fetch_all=False)
df.info()
```

Note that the table used in this demo is partitioned by year and month. If you check the table in the Glue Data Catalog, you’ll see three partitions: 2022-01, 2022-02, and 2022-03.

For each table partition, the connector will create a Batch Identifier that allows us to filter a Batch of data based on its partition values. In the code above, we are loading the partition 2022-03. If you do not specify the partition, the connector will get the first partition available by default.

Note: filtering by partition is only available if your table is partitioned; otherwise, the whole table is loaded as a single Batch.
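To make the partition filtering concrete, here is a conceptual sketch, not the connector’s actual implementation: each partition becomes a dict of batch identifiers, and `batch_filter_parameters` keeps the ones whose values all match. The helper `partition_query` (hypothetical) also turns a “YYYY-MM” label such as 2022-03 into the `data_connector_query` used above, assuming string partition keys named `year` and `month` as in the demo table.

```python
from typing import Dict, List


def partition_query(partition: str) -> Dict[str, Dict[str, str]]:
    """Turn a "YYYY-MM" partition label into a data_connector_query."""
    year, month = partition.split("-")
    return {"batch_filter_parameters": {"year": year, "month": month}}


def matching_batches(
    batch_identifiers: List[Dict[str, str]], query: Dict[str, Dict[str, str]]
) -> List[Dict[str, str]]:
    """Keep the batches whose identifiers match every filter value."""
    wanted = query["batch_filter_parameters"]
    return [
        ids for ids in batch_identifiers
        if all(ids.get(key) == value for key, value in wanted.items())
    ]


# One identifier dict per partition of tb_nyc_trip_data
batches = [{"year": "2022", "month": m} for m in ("01", "02", "03")]
print(matching_batches(batches, partition_query("2022-03")))
```

In the notebook, `BatchRequest(..., data_connector_query=partition_query("2022-03"))` would be equivalent to the literal dict in the cell above. Keeping the values as strings matters: the partition values are compared as strings, so `"03"` and `3` would not match.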

After running the code cell above, you can start to define the Expectations you want. Use the following code as an example of how to define the Expectations and save them:

```python
# Define the Expectations
validator.expect_table_row_count_to_be_between(min_value=1, max_value=None)
validator.expect_column_values_to_not_be_null(column="vendorid")
validator.expect_column_values_to_be_between(column="passenger_count", min_value=0, max_value=9)

# Save the Expectation Suite
validator.save_expectation_suite(discard_failed_expectations=False)
```
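As the list of Expectations grows, you might prefer to keep them as data and apply them in a loop. This is one possible pattern, not a GX feature: `EXPECTATIONS` and `apply_expectations` are hypothetical names, and `RecordingValidator` is only a stand-in so the sketch runs without a Glue session.

```python
from typing import Any, Dict, List, Tuple

# The same Expectations as above, kept as (method name, kwargs) pairs.
EXPECTATIONS: List[Tuple[str, Dict[str, Any]]] = [
    ("expect_table_row_count_to_be_between", {"min_value": 1, "max_value": None}),
    ("expect_column_values_to_not_be_null", {"column": "vendorid"}),
    ("expect_column_values_to_be_between",
     {"column": "passenger_count", "min_value": 0, "max_value": 9}),
]


def apply_expectations(validator: Any, expectations: List[Tuple[str, Dict[str, Any]]]) -> List[Any]:
    """Call each Expectation method on the validator and collect the results."""
    results = []
    for method_name, kwargs in expectations:
        # getattr raises AttributeError if the object has no such method
        method = getattr(validator, method_name)
        results.append(method(**kwargs))
    return results


class RecordingValidator:
    """Stand-in for a GX Validator that only records the calls it receives."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        # Only called for attributes not found normally, i.e. expect_* methods
        def record(**kwargs):
            self.calls.append((name, kwargs))
            return {"expectation_type": name, "kwargs": kwargs}
        return record


stub = RecordingValidator()
apply_expectations(stub, EXPECTATIONS)
print([name for name, _ in stub.calls])
```

With the real validator you would run `apply_expectations(validator, EXPECTATIONS)` followed by `validator.save_expectation_suite(discard_failed_expectations=False)`.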

Check that the Expectation Suite was saved to the S3 bucket we defined as our store backend.
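To check this programmatically rather than in the console, you can look for the suite’s JSON key among the bucket’s objects. The sketch assumes the default S3 layout used by `S3StoreBackendDefaults`: suites under an `expectations/` prefix, with the dot-separated suite name turned into a path ending in `.json`. Treat that layout as an assumption and confirm it against what you see in your bucket.

```python
def expected_suite_key(suite_name: str, prefix: str = "expectations") -> str:
    """S3 key where we expect the suite JSON to be stored (assumed layout)."""
    return "/".join([prefix, *suite_name.split(".")]) + ".json"


def suite_saved(keys, suite_name: str) -> bool:
    """True if the expected key is among the listed S3 object keys."""
    return expected_suite_key(suite_name) in set(keys)


# With boto3 you could list the keys like this (bucket is your demo bucket):
#   import boto3
#   resp = boto3.client("s3").list_objects_v2(Bucket=bucket, Prefix="expectations/")
#   keys = [obj["Key"] for obj in resp.get("Contents", [])]
#   print(suite_saved(keys, "demo.taxi_trip.warning"))
print(expected_suite_key("demo.taxi_trip.warning"))
```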

4. Validate Data

With the Expectation Suite already created, you can create a Checkpoint to validate data. Run the following code cell to create, save, and run the Checkpoint to get the Validation Results:

```python
from ruamel.yaml import YAML

# Safe loader; works across ruamel.yaml versions (the module-level
# yaml.load() without a Loader is deprecated in newer releases)
yaml = YAML(typ="safe")

# Create Checkpoint
my_checkpoint_name = "demo.taxi_trip.checkpoint"

yaml_config = f"""
name: {my_checkpoint_name}
config_version: 1.0
module_name: great_expectations.checkpoint
class_name: Checkpoint
run_name_template: "%Y%m%d-TaxiTrip-GlueInferred"
action_list:
  - name: store_validation_result
    action:
      class_name: StoreValidationResultAction
  - name: update_data_docs
    action:
      class_name: UpdateDataDocsAction
      site_names: []
validations:
  - batch_request:
      datasource_name: GlueDataSource
      data_connector_name: InferredGlue
      data_asset_name: db_ge_with_glue_demo.tb_nyc_trip_data
      data_connector_query:
        batch_filter_parameters:
          year: '2022'
          month: '03'
    expectation_suite_name: {expectation_suite_name}
"""

# Save Checkpoint
_ = context.add_checkpoint(**yaml.load(yaml_config))

# Run Checkpoint
r = context.run_checkpoint(checkpoint_name=my_checkpoint_name)
pprint(r)
```
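If you want to validate other partitions, building the same Checkpoint as a Python dict avoids YAML pitfalls such as an unquoted `03` being parsed as a number. The sketch mirrors the YAML above field by field; `glue_checkpoint_config` is a hypothetical helper, and its result would be passed as `context.add_checkpoint(**config)`.

```python
from typing import Any, Dict


def glue_checkpoint_config(name: str, suite_name: str, year: str, month: str) -> Dict[str, Any]:
    """Same Checkpoint as the YAML above, for one year/month partition."""
    return {
        "name": name,
        "config_version": 1.0,
        "module_name": "great_expectations.checkpoint",
        "class_name": "Checkpoint",
        "run_name_template": "%Y%m%d-TaxiTrip-GlueInferred",
        "action_list": [
            {"name": "store_validation_result",
             "action": {"class_name": "StoreValidationResultAction"}},
            {"name": "update_data_docs",
             "action": {"class_name": "UpdateDataDocsAction", "site_names": []}},
        ],
        "validations": [
            {
                "batch_request": {
                    "datasource_name": "GlueDataSource",
                    "data_connector_name": "InferredGlue",
                    "data_asset_name": "db_ge_with_glue_demo.tb_nyc_trip_data",
                    "data_connector_query": {
                        # Partition values stay strings, matching the catalog
                        "batch_filter_parameters": {"year": year, "month": month}
                    },
                },
                "expectation_suite_name": suite_name,
            }
        ],
    }


config = glue_checkpoint_config(
    "demo.taxi_trip.checkpoint", "demo.taxi_trip.warning", "2022", "03"
)
print(config["validations"][0]["batch_request"]["data_connector_query"])
```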

🚀🚀 Congratulations! 🚀🚀 You’ve successfully connected Great Expectations with your data through AWS Glue Data Catalog and ran Validations on the partitioned tables in the catalog.
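The full `pprint(r)` output is long. A small helper can pull out just the failed Expectations from a validation result’s JSON dict, assuming the usual shape with a `results` list whose entries carry `success` and `expectation_config`. The helper name is hypothetical, and the sample below is hand-written to show the shape, not actual results from the demo table.

```python
from typing import Any, Dict, List


def failed_expectations(validation_result: Dict[str, Any]) -> List[str]:
    """List "expectation_type(column)" for each failed Expectation."""
    failed = []
    for res in validation_result.get("results", []):
        if not res.get("success", False):
            config = res.get("expectation_config", {})
            # Table-level Expectations have no "column" kwarg
            column = config.get("kwargs", {}).get("column", "table")
            failed.append(f"{config.get('expectation_type')}({column})")
    return failed


# Made-up sample in the shape of a validation result's JSON dict.
sample = {
    "success": False,
    "results": [
        {"success": True, "expectation_config": {
            "expectation_type": "expect_column_values_to_not_be_null",
            "kwargs": {"column": "vendorid"}}},
        {"success": False, "expectation_config": {
            "expectation_type": "expect_column_values_to_be_between",
            "kwargs": {"column": "passenger_count", "min_value": 0, "max_value": 9}}},
    ],
}
print(failed_expectations(sample))
```

Assuming your GX version exposes `CheckpointResult.list_validation_results()` and `to_json_dict()` on each result, usage would look like `for vr in r.list_validation_results(): print(failed_expectations(vr.to_json_dict()))`.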

5. Clean up

To avoid incurring charges, in your device’s command line, go to the infra directory and run the following to destroy the resources created by the provided Terraform code:

```shell
$ terraform destroy -auto-approve
```

Because the notebook was created outside of Terraform, you need to delete it manually. To do so, open the AWS Console and go to Glue. In the Glue console menu, open Interactive Sessions and delete the session that the notebook opened.