Machine learning has been, and will continue to be, one of the biggest topics in data for the foreseeable future. And while we in the data community are all still riding the high of discovering and tuning predictive algorithms that can tell us whether a picture shows a dog or a blueberry muffin, we’re also beginning to realize that ML isn’t just a magic wand you can wave at a pile of data to quickly get insightful, reliable results.

Instead, we are starting to treat ML like other software engineering disciplines that require processes and tooling to ensure seamless workflows and reliable outputs. Data quality, in particular, has been a consistent focus, as poor data quality often leads to issues that can go unnoticed for a long time, bring entire pipelines to a halt, and erode the trust of stakeholders in the reliability of their analytical insights:

“Poor data quality is Enemy #1 to the widespread, profitable use of machine learning, and for this reason, the growth of machine learning increases the importance of data cleansing and preparation. The quality demands of machine learning are steep, and bad data can backfire twice -- first when training predictive models and second in the new data used by that model to inform future decisions.” (TDWI blog)

In this post, we are going to look at ML Ops, a recent development in ML that bridges the gap between ML and traditional software engineering, and highlight why data quality is key to ML Ops workflows that accelerate data teams and maintain trust in their data.

What is ML Ops?

Let’s take a step back and first look at what we actually mean by “ML Ops”. The term ML Ops evolved from the better-known concept of “DevOps”, which generally refers to the set of tools and practices that combines software development and IT operations. The goal of DevOps is to accelerate software development and deployment throughout the entire development lifecycle while ensuring the quality of software by streamlining and automating a lot of the steps required. Some examples of DevOps practices most of us are familiar with are version control of code using tools such as Git, code reviews, continuous integration (CI), i.e. the process of frequently merging code into a shared mainline, automated testing, and continuous deployment (CD), i.e. frequent automated releases of code into production.

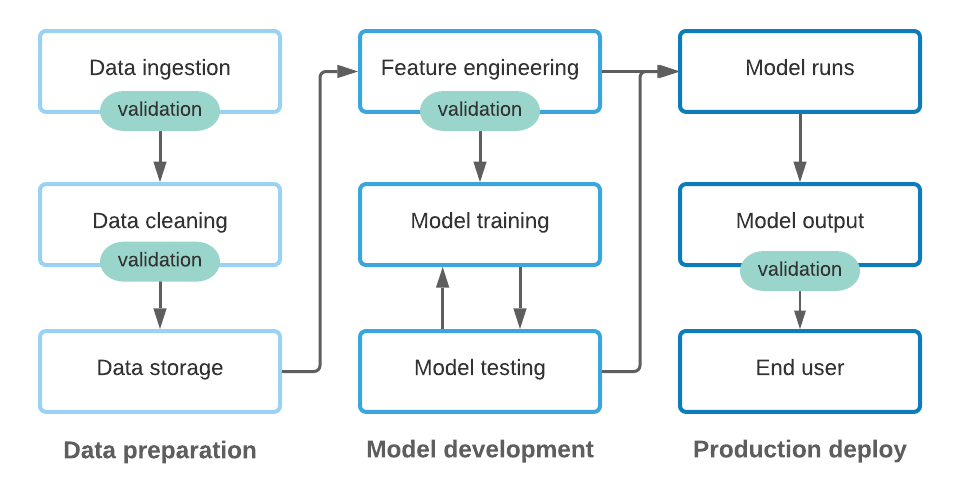

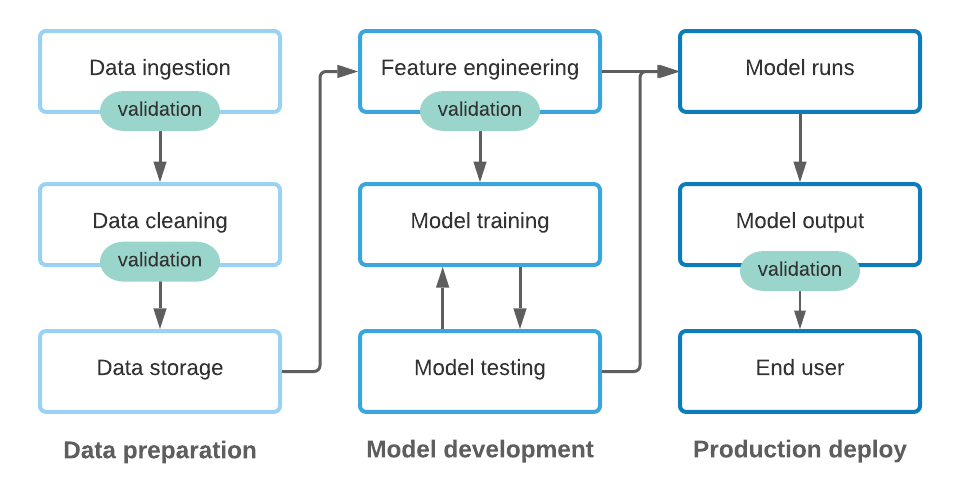

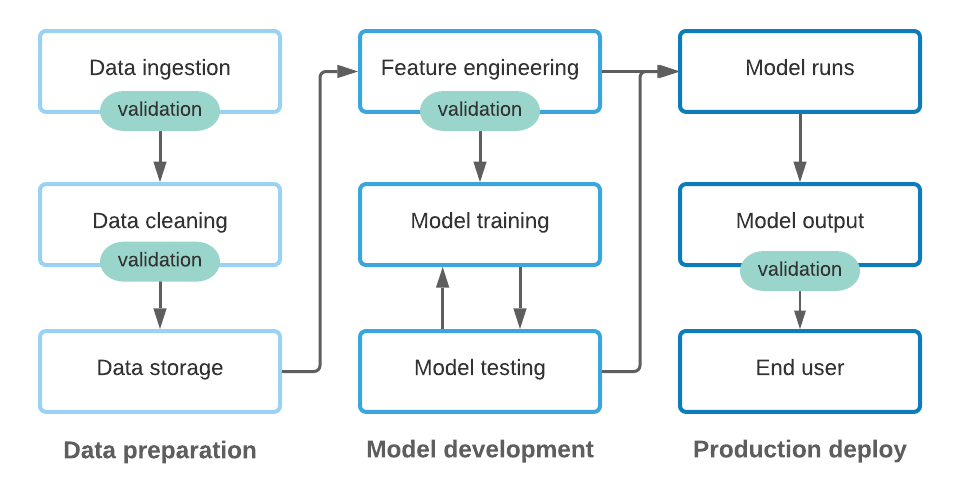

When applied to a machine learning context, the goals of ML Ops are very similar: to accelerate the development and production deployment of machine learning models while ensuring the quality of model outputs. However, unlike with software development, ML deals with both code and data:

Machine learning starts with data that’s being ingested from various sources, cleaned, transformed, and stored using code.

That data is then made available to data scientists who write code to engineer features, develop, train and test machine learning models, which, in turn, are eventually deployed to a production environment.

In production, ML models exist as code that takes input data, which, again, may be ingested from various sources, and creates output data that’s used to feed into products and business processes.

And while our description of this process is obviously simplified, it’s clear that code and data are tightly coupled in a machine learning environment, and ML Ops needs to take care of both.

Concretely, this means that ML Ops incorporates tasks such as:

Version control of any code used for data transformations and model definitions

Automated testing of the ingested data and model code before going into production

Deployment of the model in production in a stable and scalable environment

Monitoring of the model performance and output

How do data testing and documentation fit into ML Ops?

Let’s go back to the original goal of ML Ops: to accelerate the development and production deployment of machine learning models while ensuring the quality of model outputs. Of course, as data quality folks, we at Great Expectations believe that data testing and documentation are absolutely essential to accomplishing those key goals of acceleration and quality at various stages in the ML workflow:

On the stakeholder side, poor data quality affects the trust stakeholders have in a system, which negatively impacts the ability to make decisions based on it. Or even worse, data quality issues that go unnoticed might lead to incorrect conclusions and wasted time rectifying those problems.

On the engineering side, scrambling to fix data quality problems that were noticed by downstream consumers is one of the biggest issues that costs teams time and slowly erodes team productivity and morale.

Moreover, data documentation is essential for all stakeholders to communicate about the data and establish data contracts: “Here is what we know to be true about the data, and we want to ensure that continues to be the case.”

In the following paragraphs, we’ll look at the individual stages in an ML pipeline at a very abstract level, and discuss how data testing and documentation fit into each stage.

At the data ingestion stage

Even at the earliest stages of working with a data set, establishing quality checks around your data and documenting those can immensely speed up operations in the long run. Solid data testing gives engineers confidence that they can safely make changes to ingestion pipelines without causing unwanted problems. At the same time, when ingesting data from internal and external upstream sources, data validation at the ingestion stage is absolutely critical to ensure that there are no unexpected changes to the data that go unnoticed.

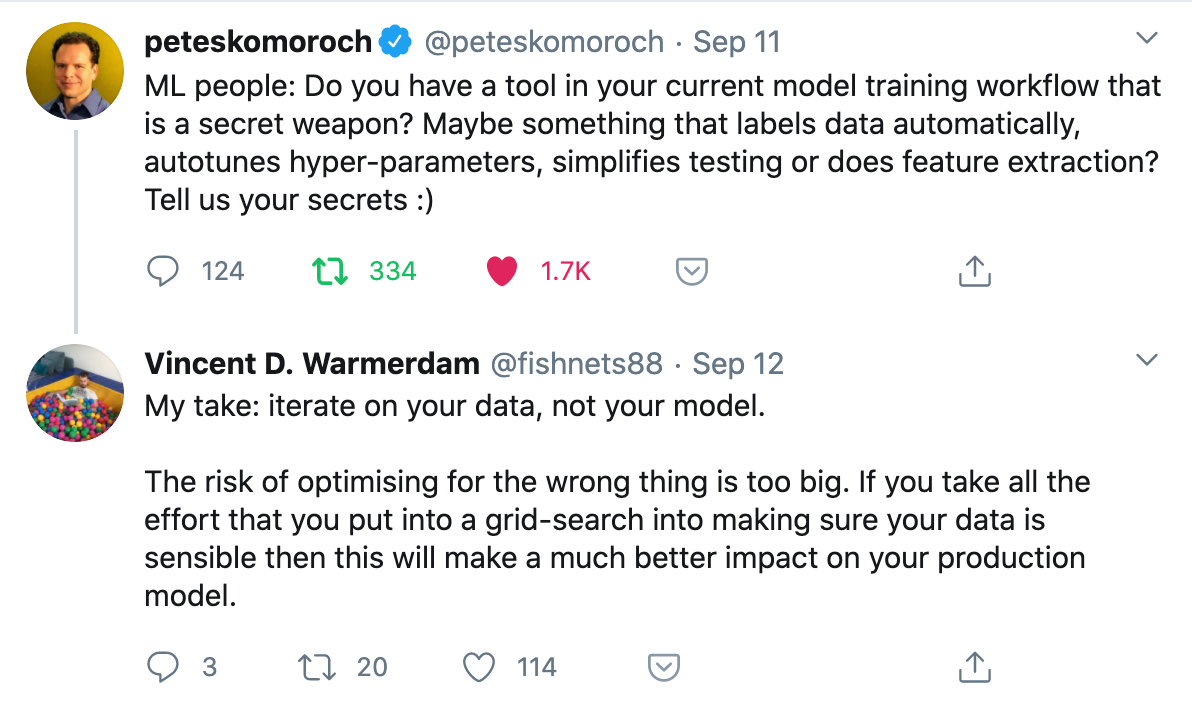

Twitter thread by [Pete Skomoroch](https://twitter.com/peteskomoroch) and [Vincent D. Warmerdam](https://twitter.com/fishnets88)

We’ve been trying really hard to avoid this cliché in this post, but here we go: Garbage in, garbage out. Thoroughly testing your input data is absolutely fundamental to ensuring your model output isn’t completely useless.
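As a minimal sketch of what validation at the ingestion stage could look like, the plain-Python example below checks a batch of ingested records against a few expectations before the batch is passed on. The column names, the sample rows, and the `validate_batch` helper are all hypothetical and chosen purely for illustration; a dedicated data validation framework would offer far more than this.

```python
# Hypothetical ingested batch; in practice this would come from a file, API, or database.
rows = [
    {"user_id": 1, "country": "DE", "amount": 19.99},
    {"user_id": 2, "country": "US", "amount": 5.00},
    {"user_id": None, "country": "FR", "amount": -3.50},
]

REQUIRED_COLUMNS = {"user_id", "country", "amount"}

# Each check: (column, description of the expectation, predicate on the value).
CHECKS = [
    ("user_id", "must not be null", lambda v: v is not None),
    ("country", "must be a two-letter code", lambda v: isinstance(v, str) and len(v) == 2),
    ("amount", "must be a non-negative number", lambda v: isinstance(v, (int, float)) and v >= 0),
]


def validate_batch(rows, required_columns=REQUIRED_COLUMNS, checks=CHECKS):
    """Return a list of readable failures; an empty list means the batch passed."""
    failures = []
    for i, row in enumerate(rows):
        missing = required_columns - set(row)
        if missing:
            failures.append(f"row {i}: missing columns {sorted(missing)}")
            continue  # skip value checks for structurally broken rows
        for column, description, predicate in checks:
            if not predicate(row[column]):
                failures.append(f"row {i}: {column} {description} (got {row[column]!r})")
    return failures


failures = validate_batch(rows)
for failure in failures:
    print(failure)
```

An ingestion job could refuse to load a batch, or raise an alert, whenever `failures` is non-empty, so that unexpected upstream changes are caught before they reach a model.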

When developing a model

For the purpose of this article, we’ll consider feature engineering, model training, and model testing to all be part of the core model development process. During this often-iterative process, guardrails around the data transformation code and model output support data scientists so they can make changes in one place without potentially breaking things in others.

In classic DevOps tradition, continuous testing via CI/CD workflows quickly surfaces any issues introduced by modifications to code. And to go even further, most software engineering teams require developers to not just test their code using existing tests, but also add new tests when creating new features. In the same way, we believe that running tests as well as writing new tests should be part of the ML model development process.
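To illustrate what adding a test alongside a new feature might look like, here is a small sketch in plain Python. The `add_log_amount` feature step and its test are hypothetical examples, not taken from any particular codebase; in a real project the test function would typically be collected by a test runner as part of CI.

```python
import math


def add_log_amount(rows):
    """Hypothetical feature step: add log(1 + amount) to each row."""
    return [{**row, "log_amount": math.log1p(row["amount"])} for row in rows]


def test_add_log_amount():
    raw = [{"amount": 0.0}, {"amount": 9.0}]
    features = add_log_amount(raw)

    # The transformation must not drop or duplicate rows.
    assert len(features) == len(raw)
    # The new feature must be present and finite for every row.
    assert all(math.isfinite(row["log_amount"]) for row in features)
    # A known input should produce a known output.
    assert features[0]["log_amount"] == 0.0
    # The input rows must be left untouched.
    assert "log_amount" not in raw[0]


test_add_log_amount()
```

A test like this acts as a guardrail: if someone later changes the feature step in a way that drops rows or mutates the input, the CI run fails before the change reaches model training.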

When running a model in production

As with all things ML Ops, a model running in production depends on both the code and the data it is fed in order to produce reliable results. Similar to the data ingestion stage, we need to secure the data input in order to avoid any unwanted issues stemming from either code changes or changes in the actual data. At the same time, we should also have some testing around the model output to ensure that it continues to meet our expectations. We occasionally hear from data teams that a faulty value in their model output went undetected for several weeks (and in the worst case, they were alerted by their stakeholders before they detected the issue themselves).
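A rough sketch of testing model output could look like the following. It assumes a model that emits probability-like scores; the `check_model_output` helper and its thresholds are hypothetical and would need to be tuned to the model at hand.

```python
from statistics import mean


def check_model_output(scores, expected_mean_range=(0.05, 0.30), max_null_fraction=0.01):
    """Return a list of problems found in a batch of scores; empty means all checks passed."""
    if not scores:
        return ["no predictions produced"]

    problems = []

    # Too many missing predictions usually points at an upstream data issue.
    null_fraction = sum(s is None for s in scores) / len(scores)
    if null_fraction > max_null_fraction:
        problems.append(f"null fraction {null_fraction:.2%} exceeds {max_null_fraction:.2%}")

    valid = [s for s in scores if s is not None]

    # Probability-like scores must stay within [0, 1].
    out_of_range = [s for s in valid if not 0.0 <= s <= 1.0]
    if out_of_range:
        problems.append(f"{len(out_of_range)} score(s) outside [0, 1]")

    # A shifted batch mean is a cheap signal that inputs or the model have changed.
    if valid:
        low, high = expected_mean_range
        batch_mean = mean(valid)
        if not low <= batch_mean <= high:
            problems.append(f"mean score {batch_mean:.3f} outside expected range [{low}, {high}]")

    return problems


print(check_model_output([0.10, 0.20, 0.15]))
print(check_model_output([0.90, 0.95, 1.20]))
```

Running a check like this on every scoring batch means a faulty value is flagged on the day it appears rather than weeks later.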

Especially in an environment with black box ML models, establishing and maintaining standards for quality is crucial in order to trust the model output. In the same way, documenting the expected output of a model in a shared place can help data teams and stakeholders define and communicate “data contracts” in order to increase transparency and trust in ML pipelines.
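One way to picture such a data contract is as a small, declarative list of expectations that can be both rendered as documentation and checked against the data. The sketch below is a hypothetical illustration in plain Python; the `CONTRACT` structure, rule names, and column names are assumptions made for this example and do not represent any particular framework's API.

```python
# Hypothetical contract for a model's output table, written as plain data.
CONTRACT = [
    {"column": "customer_id", "rule": "not_null"},
    {"column": "churn_score", "rule": "between", "min": 0.0, "max": 1.0},
]


def describe(expectation):
    """Render one expectation as a sentence stakeholders can read."""
    column = expectation["column"]
    if expectation["rule"] == "not_null":
        return f"{column} is never missing."
    if expectation["rule"] == "between":
        return f"{column} is always between {expectation['min']} and {expectation['max']}."
    raise ValueError(f"unknown rule: {expectation['rule']}")


def holds(expectation, row):
    """Check one expectation against one output row."""
    value = row.get(expectation["column"])
    if expectation["rule"] == "not_null":
        return value is not None
    if expectation["rule"] == "between":
        return value is not None and expectation["min"] <= value <= expectation["max"]
    raise ValueError(f"unknown rule: {expectation['rule']}")


# The same contract drives both the shared documentation and the validation.
documentation = [describe(e) for e in CONTRACT]
row = {"customer_id": "c-42", "churn_score": 0.37}
row_is_valid = all(holds(e, row) for e in CONTRACT)

print("\n".join(documentation))
print(row_is_valid)
```

Because the documentation is generated from the same definitions that are tested, the written contract cannot silently drift away from what is actually enforced.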

What’s next?

By this point, it’s probably clear how data validation and documentation fit into ML Ops: namely by allowing you to implement tests against both your data and your code, at any stage in the ML Ops pipeline that we listed out above.

We believe that data testing and documentation are going to become one of the key focus areas of ML Ops in the near future, with teams moving away from “homegrown” data testing solutions to off-the-shelf packages and platforms that provide sufficient expressivity and connectivity to meet their specific needs and environments. Great Expectations is one such data validation and documentation framework that lets users specify what they expect from their data in simple, declarative statements. In the second blog post in this two-part series, we will go into more detail on how Great Expectations fits into ML Ops.