Data volumes and analysis have grown exponentially over the last decade, along with the use of machine learning and artificial intelligence in business. All this data can suffer from a host of afflictions, including missing data, truncated data, invalid data, unexpected duplication, anomalous data, and more.

This leaves data scientists and engineers to grapple with data cleanliness, which costs organizations valuable time, money, and other resources. Machine learning and data quality both play a key role in solving this challenge.

The question is: How can organizations keep up with the volume of data they need to contend with, and manage the impact that low-quality data has on business? Here’s why improving data quality with machine learning matters and what you can do to make it a reality for your organization.

How Data Scientists Attempt to Manage These Data Problems

When you’re not feeding good-quality data to your models, the results can be unpredictable, ranging from mildly inconvenient to outright catastrophic. One way data scientists and engineers tend to deal with bad or unpredictable data is to implement defensive programming techniques. But this adds a whole layer of complexity and maintenance.
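As a rough illustration of what that defensive layer tends to look like, here is a minimal Python sketch. The record layout (`date`, `zip_code`, `quantity`) and the function name `parse_record` are hypothetical, chosen only for this example.

```python
from datetime import datetime
from typing import Optional


def parse_record(raw: dict) -> Optional[dict]:
    """Return a cleaned record, or None if the raw record is unusable."""
    # Guard 1: the quantity may be missing, non-numeric, or negative.
    try:
        quantity = int(raw.get("quantity", ""))
    except (TypeError, ValueError):
        return None
    if quantity < 0:
        return None

    # Guard 2: the zip code must be exactly five digits.
    zip_code = str(raw.get("zip_code", "")).strip()
    if len(zip_code) != 5 or not zip_code.isdigit():
        return None

    # Guard 3: the date must parse as YYYY-MM-DD.
    try:
        day = datetime.strptime(str(raw.get("date", "")), "%Y-%m-%d").date()
    except ValueError:
        return None

    return {"date": day, "zip_code": zip_code, "quantity": quantity}
```

Every new failure mode means another hand-written guard, and the rules live only in code where nobody outside the team can read them. That is the complexity and maintenance burden in question.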

Additionally, this solution is somewhat reactive, as scientists and engineers can’t foresee or account for every potential condition in the constantly evolving data landscape. The cost of the resulting knowledge gap adds up fast. Bad data costs U.S. companies $9.7 million each on average, according to IBM.

That’s a multibillion-dollar problem when you multiply it by the number of companies facing data issues across the globe. So what can teams and companies do to get a sense of the current and historical state of their data, understand its quality, and determine how to detect and test for problems going forward?

A Better Way to Achieve Data Quality

That’s where Great Expectations (GX) comes in. The shared, open standard for data quality, Great Expectations helps data teams eliminate pipeline debt through data testing, documentation, and profiling. GX is a set of closely related tools that allow data teams to make sense of their existing corpus of data, document it, and generate suggested automated tests against the known data. This way, you’re able to detect changes or anomalies in the data before it’s fed to ML models or any other analysis tooling.

Great Expectations helps teams and organizations to rein in data quality issues in a couple of different ways:

- It provides a complete picture of data ranges and data types in data lakes via profiling and Data Docs
- It profiles historical data in data warehouses, which enables you to:
  - Infer Expectations from it
  - Create Checkpoints to validate the data
  - Create human-understandable documentation of said data (i.e., Data Docs)
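To give a feel for what "inferring Expectations" from historical data means, here is a hand-rolled sketch in plain Python. It is not the GX profiler or its API. The function `infer_expectations`, the dictionary it returns, and the `quantity` column are all hypothetical, and the sketch assumes the column's values are mutually comparable (for example, all integers).

```python
from typing import Any


def infer_expectations(rows: list[dict[str, Any]], column: str) -> dict[str, Any]:
    """Profile one column of historical rows and summarize what was observed."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if v is not None]
    return {
        "column": column,
        # True only if no missing values have been seen so far.
        "not_null": len(present) == len(values),
        # Name of the Python type of the first non-null value, if any.
        "type": type(present[0]).__name__ if present else None,
        # Observed range; new data outside it deserves a second look.
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


history = [{"quantity": 7}, {"quantity": 12}, {"quantity": 9}]
print(infer_expectations(history, "quantity"))
# {'column': 'quantity', 'not_null': True, 'type': 'int', 'min': 7, 'max': 12}
```

The summary is a starting point rather than a verdict: a team would still review the inferred ranges and adjust them before using them as tests.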

To better understand where and how Great Expectations will help your company attain its data quality goals and where in the data pipeline it provides the most value, let’s walk through a hypothetical example.

Machine Learning and Data Quality: Making The Connection

Delivery Blitzing is a (hypothetical) last-mile parcel delivery company whose business model relies on smart-filling labor needs: an ML model predicts how many drivers and loaders each day requires. Let’s assume, for the sake of illustration, that the model (or models) is “good enough” and behaves well with the data fed to it so far.

But an unexpected data condition can lead to a prediction error. This can cost the company thousands of dollars, either in excess drivers and loaders or in too few resources to handle the demand. A key part of being a last-mile delivery service is that packages are received (inbound) not from people or businesses but from other delivery and logistics companies, so those inbound shipments will be the model’s key input data.

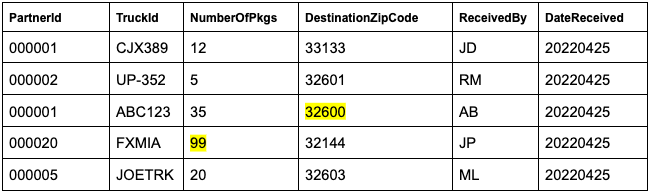

Let’s assume that the table where this data is stored looks as follows (but with thousands of records added daily):

Now, let’s assume the prediction engine takes into account things like the date, zip code, and the number of packages to make a decision on how many loaders and drivers to page. Let’s also assume there’s a limit on how many packages our little delivery trucks can handle.

Next, let’s imagine someone entered an input of 99 when it was actually 9. Or maybe they entered an invalid zip code. What will the prediction engine do? Will it schedule excess drivers and loaders due to the first mistake? In the second scenario, will it schedule any drivers and loaders at all given the invalid zip code? And it doesn't end there. What if, unexpectedly, some of those fields are empty? Or have negative numbers? Or are stored as literal strings? These are just a few examples of how bad data can rear its ugly head.
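A toy calculation makes the stakes easier to see. The sketch below is a deliberately simple stand-in for the prediction engine, not a real model: the function `drivers_needed`, the truck capacity of 20 packages, and the set of served zip codes are all assumptions invented for illustration.

```python
import math

TRUCK_CAPACITY = 20  # assumed packages per truck; the scenario gives no real figure
SERVED_ZIP_CODES = {"30301", "30302"}  # hypothetical service area


def drivers_needed(zip_code: str, package_count: int) -> int:
    """Toy stand-in for the labor prediction: one driver per full or partial truck."""
    if zip_code not in SERVED_ZIP_CODES:
        return 0  # an unrecognized zip code silently schedules nobody
    return math.ceil(package_count / TRUCK_CAPACITY)


print(drivers_needed("30301", 9))   # 1
print(drivers_needed("30301", 99))  # 5
print(drivers_needed("3030", 9))    # 0
```

Under these assumptions, a single extra keystroke turns one driver into five, and a truncated zip code leaves the packages with no driver at all. Neither case raises an error, which is exactly why such mistakes are expensive.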

Delivery Blitzing may now be asking, what does the corpus of our historical data look like? What types of data are getting entered? And how do we make sure our daily data is clean before feeding it to the labor prediction engine?

This is where Great Expectations shines. With its robust set of data connectors, data profiling, and expectation suite generation, GX adds a layer of assurance and visibility.

Catching Bad Data To Improve Machine Learning

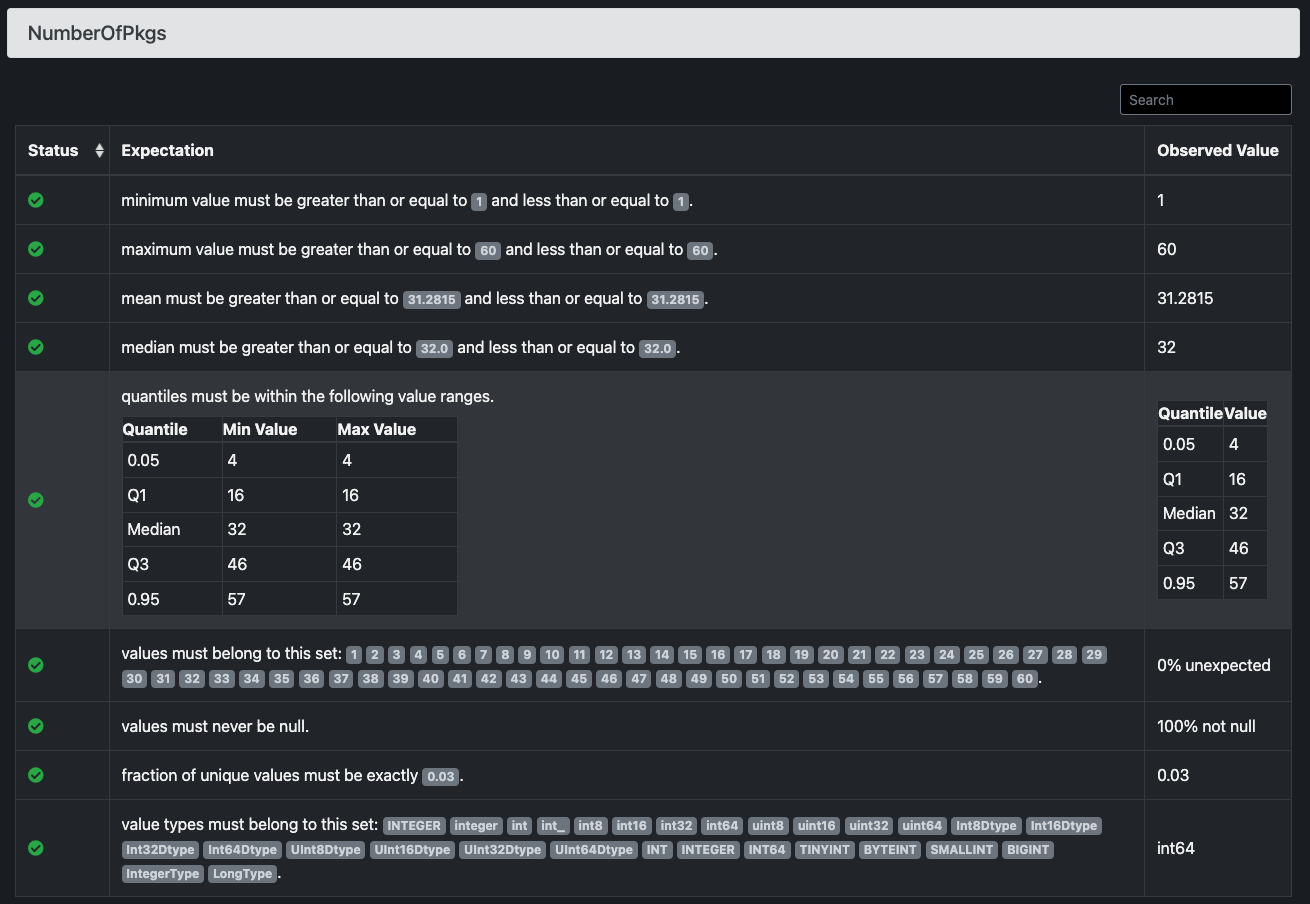

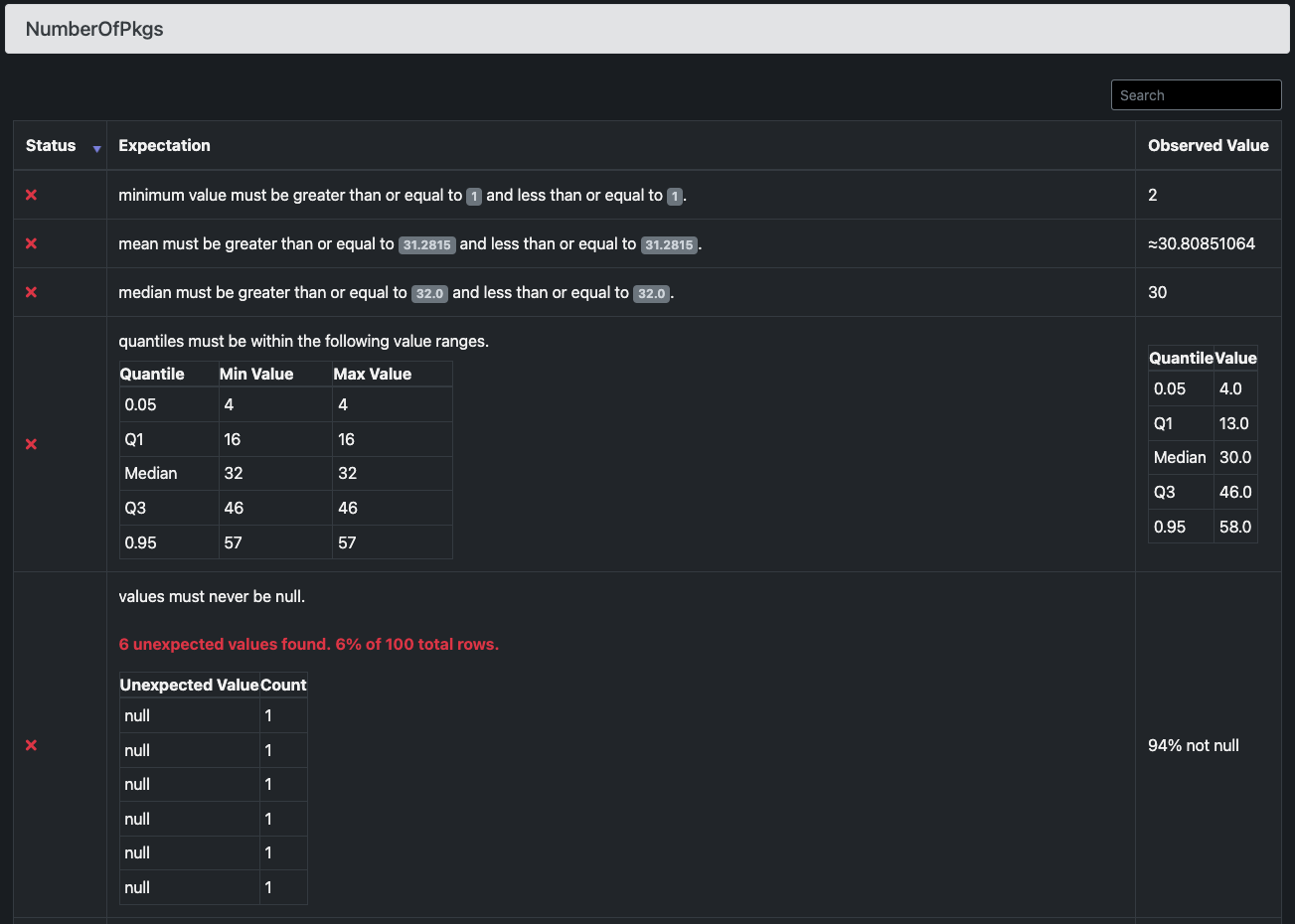

Once Great Expectations is set up and connected to the data warehouse that holds its historical daily data, Delivery Blitzing can create an Expectation Suite based on that data. If the team isn’t quite sure what all of the data looks like and what patterns exist, GX can assist with that, either interactively via the CLI, based on a sample batch of the data, or fully automated via a built-in data profiler. Once the profiling and the validation against the data are complete, GX opens a local web browser with the Data Docs and validation results for perusal.

Think of Data Docs as the source of truth about the state of data. With this information and the auto-generated Expectation Suite, Delivery Blitzing can validate each new batch of daily data and review the results.

As you can see, the Expectations caught the bad data and brought attention to it with human-readable explanations.
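For readers without the screenshots at hand, the following plain-Python sketch imitates the idea of declarative checks that report failures in readable language. It is a hand-rolled illustration and does not use the GX API. The column names `zip_code` and `package_count`, the upper bound of 50, and the function `validate_batch` are hypothetical.

```python
import re

# Each check is (column, human-readable rule, predicate). The range check skips
# nulls so that a missing value is reported once, by the not-null rule.
EXPECTATIONS = [
    ("package_count", "must not be null", lambda v: v is not None),
    ("package_count", "must be an integer between 0 and 50",
     lambda v: v is None or (isinstance(v, int) and 0 <= v <= 50)),
    ("zip_code", "must be a five-digit string",
     lambda v: isinstance(v, str) and re.fullmatch(r"\d{5}", v) is not None),
]


def validate_batch(rows: list[dict]) -> list[str]:
    """Return one readable message per failed check; an empty list means clean."""
    failures = []
    for index, row in enumerate(rows):
        for column, rule, predicate in EXPECTATIONS:
            value = row.get(column)
            if not predicate(value):
                failures.append(f"row {index}: {column}={value!r} {rule}")
    return failures


batch = [
    {"zip_code": "30301", "package_count": 9},
    {"zip_code": "30301", "package_count": 99},
    {"zip_code": "3030", "package_count": 9},
    {"zip_code": "30301", "package_count": None},
]
for message in validate_batch(batch):
    print(message)
```

Keeping the rules as data rather than scattering them through parsing code is what makes them reviewable by people who did not write the pipeline.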

Great Expectations also provides the flexibility to add Expectations to the Suite or remove them as deemed necessary. So, for example, if your team does not consider quantiles relevant or see the need to test for minimum values, you could easily remove them.

Once you and your team decide to include Great Expectations in your data stack, it’s a matter of where it fits in your workflow and pipeline. You could set it up as a daily run before data is fed to the prediction model, or you could run it as a step in your orchestrator every time a new batch of data is added to the historical daily data table or tables.
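Either placement boils down to the same gate: validate first, and only pass clean data to the model. Here is a minimal sketch of that gate, assuming a `validate` callable that returns a list of failure messages and a `predict` callable that wraps the model. Both callables, along with `DataQualityError` and `run_daily_pipeline`, are hypothetical names for this example; in a GX setup the validation step would presumably be a Checkpoint run.

```python
from typing import Any, Callable


class DataQualityError(Exception):
    """Raised when a batch fails validation and must not reach the model."""


def run_daily_pipeline(
    batch: list,
    validate: Callable[[list], list],
    predict: Callable[[list], Any],
) -> Any:
    """Run the model only if validation reports no failures."""
    failures = validate(batch)
    if failures:
        # Stop here so bad data never influences the schedule.
        raise DataQualityError(
            f"{len(failures)} check(s) failed; first: {failures[0]}"
        )
    return predict(batch)
```

Raising an exception lets an orchestrator mark the step as failed and alert someone, rather than quietly producing a labor schedule from bad inputs.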

Ready to Unite Machine Learning and Data Quality?

Using Great Expectations is a great step toward painting a picture of your data and its quality. It articulates and presents the assumptions in the data, and the conditions under which the data could present a problem, in a way users can easily understand.

Want to see it for yourself? Now’s a great time to get started.